Saturday, November 23, 2019

Three Steps To Install Angular

Step 1 - Install NodeJS

- Follow the link - https://nodejs.org/en/download/

- Download the node.js installer for Windows and install it.

- Type “node -v” and “npm -v” in a command prompt to check that Node.js and npm are installed and to see their versions.

Step 2 - Install TypeScript

- Open the link https://www.npmjs.com/package/typescript

- Copy the install command shown there, “npm install -g typescript”, and run it in a command prompt.

Step 3 - Install Angular CLI

Open the link https://cli.angular.io/ and follow the instructions to install Angular CLI and to create your first Angular app.

- Type the command “npm install -g @angular/cli” in the command prompt and press Enter to install Angular CLI.

- Type “ng new hello-world” and hit enter to create the Hello World app.

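Once you see the message “Project ‘hello-world’”, the app has been created on disk.

- Finally, change into the new project folder with “cd hello-world” and type “ng serve -o” to build the app, start the development server and open the "Hello World" app in your browser.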

Thursday, November 21, 2019

Chocolatey software management automation for Windows

Chocolatey works with more than 20 installer technologies for Windows, and it can also manage things you would normally xcopy deploy (like runtime binaries and zip files). You can also work with registry settings, manage files and configurations, or any combination of these. Since it uses PowerShell, if you can dream it, you can do it with Chocolatey.

Chocolatey builds on technologies that are familiar:

- PowerShell

- Unattended installations

Chocolatey also integrates with infrastructure management tools (like Puppet, Chef or SCCM) and other remote administration tools.

Installing Chocolatey

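- Chocolatey installs in seconds. You are just a few steps from running choco right now!

- Open an administrative shell (cmd.exe or PowerShell) and copy the install command for that shell from below.

- Paste the copied text into your shell and press Enter.

- If you don't see any errors, you are ready to use Chocolatey! Type choco or choco -? to check that it works.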

Install with cmd.exe

@"%SystemRoot%\System32\WindowsPowerShell\v1.0\powershell.exe" -NoProfile -InputFormat None -ExecutionPolicy Bypass -Command "iex ((New-Object System.Net.WebClient).DownloadString('https://chocolatey.org/install.ps1'))" && SET "PATH=%PATH%;%ALLUSERSPROFILE%\chocolatey\bin"

Install with PowerShell.exe

Set-ExecutionPolicy Bypass -Scope Process -Force; iex ((New-Object System.Net.WebClient).DownloadString('https://chocolatey.org/install.ps1'))

Upgrading Chocolatey

choco upgrade chocolatey

Upgrading all

choco upgrade all

Wednesday, November 20, 2019

BEST PRACTICES

A collection of general Docker best practices and war stories:

- Docker Documentation

- Docker Cheat Sheet

- Best practices for writing Dockerfiles

- Dockerfile Best Practices

- Dockerfile Best Practices 2

- Building Good Docker images

- Write Excellent Dockerfiles

- 15 Docker Tips in 5 Minutes

- Everyday Hacks Docker

- Docker Image Security Best Practices

- Docker Bench Security

- Docker Engine Security Cheat Sheet

- Docker Security Best Practices for Your Vessel And Container

- Docker Tips, Tricks and Tutorials

DOCKER CHEATSHEET

Docker Registries & Repositories

Login to a Registry

docker login

docker login localhost:8080

Logout from a Registry

docker logout

docker logout localhost:8080

Searching an Image

docker search nginx

docker search --filter stars=3 --no-trunc nginx

Pulling an Image

docker image pull nginx

docker image pull localhost:5000/myadmin/nginx

Pushing an Image

docker image push eon01/nginx

docker image tag eon01/nginx localhost:5000/myadmin/nginx

docker image push localhost:5000/myadmin/nginx

Running Containers

Create and Run a Simple Container

- Start an ubuntu:latest image

- Bind the port 80 from the CONTAINER to port 3000 on the HOST

- Mount the current directory to /data on the CONTAINER

- Note: on Windows you have to change -v ${PWD}:/data to -v "C:\Data":/data

docker container run -it -p 3000:80 -v ${PWD}:/data ubuntu:latest

Creating a Container

docker container create -t -i --name infinite eon01/infinite

Running a Container

docker container run -it --name infinite -d eon01/infinite

Renaming a Container

docker container rename infinite infinity

Removing a Container

docker container rm infinite

Updating a Container

docker container update --cpu-shares 512 -m 300M infinite

Starting & Stopping Containers

Starting

docker container start nginx

Stopping

docker container stop nginx

Restarting

docker container restart nginx

Pausing

docker container pause nginx

Unpausing

docker container unpause nginx

Blocking Until a Container Stops

docker container wait nginx

Sending a SIGKILL

docker container kill nginx

Sending another signal

docker container kill -s HUP nginx

Connecting to an Existing Container

docker container attach nginx

Getting Information about Containers

Running Containers

docker container ls

docker container ls -a

Container Logs

docker logs infinite

Follow Container Logs

docker container logs infinite -f

Inspecting Containers

docker container inspect infinite

docker container inspect --format '{{ .NetworkSettings.IPAddress }}' $(docker ps -q)

Container Events

docker system events --filter container=infinite

Public Ports

docker container port infinite

Running Processes

docker container top infinite

Container Resource Usage

docker container stats infinite

Inspecting changes to files or directories on a container’s filesystem

docker container diff infinite

Manipulating Images

Listing Images

docker image ls

Building Images

docker build .

docker build github.com/creack/docker-firefox

docker build - < Dockerfile

docker build - < context.tar.gz

docker build -t eon/infinite .

docker build -f myOtherDockerfile .

curl example.com/remote/Dockerfile | docker build -f - .

Removing an Image

docker image rm nginx

Loading a Tarred Repository from a File

docker image load < ubuntu.tar.gz

docker image load --input ubuntu.tar

Save an Image to a Tar Archive

docker image save busybox > busybox.tar

Showing the History of an Image

docker image history nginx

Creating an Image From a Container

docker container commit nginx

Tagging an Image

docker image tag nginx eon01/nginx

Pushing an Image

docker image push eon01/nginx

Networking

Creating Networks

docker network create -d overlay MyOverlayNetwork

docker network create -d bridge MyBridgeNetwork

docker network create -d overlay \
  --subnet=192.168.0.0/16 \
  --subnet=192.170.0.0/16 \
  --gateway=192.168.0.100 \
  --gateway=192.170.0.100 \
  --ip-range=192.168.1.0/24 \
  --aux-address="my-router=192.168.1.5" --aux-address="my-switch=192.168.1.6" \
  --aux-address="my-printer=192.170.1.5" --aux-address="my-nas=192.170.1.6" \
  MyOverlayNetwork

Overlay networks require the Docker Engine to run in swarm mode (docker swarm init).

Removing a Network

docker network rm MyOverlayNetwork

Listing Networks

docker network ls

Getting Information About a Network

docker network inspect MyOverlayNetwork

Connecting a Running Container to a Network

docker network connect MyOverlayNetwork nginx

Connecting a Container to a Network When it Starts

docker container run -it -d --network=MyOverlayNetwork nginx

Disconnecting a Container from a Network

docker network disconnect MyOverlayNetwork nginx

Exposing Ports

Using Dockerfile, you can expose a port on the container using:

EXPOSE <CONTAINER_PORT>

EXPOSE only documents the port. To map the container port to a host port, use the -p flag of docker run, e.g.:

docker run -p $HOST_PORT:$CONTAINER_PORT --name infinite -t infinite
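The order in -p is HOST:CONTAINER, which is easy to mix up. The Python sketch below parses a simplified form of the spec, [HOST_IP:]HOST_PORT:CONTAINER_PORT[/PROTOCOL], to make that order explicit. The function name and the PortMapping type are hypothetical, and the sketch does not cover port ranges, IPv6 host addresses or a bare CONTAINER_PORT (which lets Docker pick a random host port).

```python
from typing import NamedTuple, Optional


class PortMapping(NamedTuple):
    host_ip: Optional[str]   # None means "all host interfaces"
    host_port: int
    container_port: int
    protocol: str


def parse_port_mapping(spec: str) -> PortMapping:
    """Parse the simplified form [HOST_IP:]HOST_PORT:CONTAINER_PORT[/PROTOCOL]."""
    ports, _, protocol = spec.partition("/")
    parts = ports.split(":")
    if len(parts) == 2:            # HOST_PORT:CONTAINER_PORT
        host_ip = None
        host_port, container_port = parts
    elif len(parts) == 3:          # HOST_IP:HOST_PORT:CONTAINER_PORT
        host_ip, host_port, container_port = parts
    else:
        raise ValueError(f"unsupported port spec: {spec!r}")
    # TCP is the default protocol when none is given
    return PortMapping(host_ip, int(host_port), int(container_port), protocol or "tcp")


if __name__ == "__main__":
    print(parse_port_mapping("3000:80"))
    print(parse_port_mapping("127.0.0.1:8080:80/udp"))
```

For example, parse_port_mapping("3000:80") says that port 3000 on the host forwards to port 80 inside the container.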

Security

Guidelines for building secure Docker images

- Prefer minimal base images

- Run as a dedicated, least-privileged user in the image instead of root

- Sign and verify images to mitigate MITM attacks

- Find, fix and monitor for open source vulnerabilities

- Don’t leak sensitive information into Docker images

- Use fixed tags for immutability

- Use COPY instead of ADD

- Use labels for metadata

- Use multi-stage builds for small secure images

- Use a linter

Cleaning Docker

Removing a Running Container

docker container rm -f nginx

Removing a Container and its Volume

docker container rm -v nginx

Removing all Exited Containers

docker container rm $(docker container ls -a -f status=exited -q)

Removing All Stopped Containers

docker container rm `docker container ls -a -q`

Removing a Docker Image

docker image rm nginx

Removing Dangling Images

docker image rm $(docker image ls -f dangling=true -q)

Removing all Images

docker image rm $(docker image ls -a -q)

Removing all untagged images

docker image rm -f $(docker image ls | grep "^<none>" | awk '{print $3}')
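The pipeline keeps the rows of docker image ls whose REPOSITORY column is <none> and prints their third column, the IMAGE ID. The awk program has to be in single quotes: inside double quotes the shell would expand $3 before awk sees it. A small Python equivalent of the grep and awk steps, run against made-up sample output (the image IDs and sizes are hypothetical):

```python
from typing import List

# Hypothetical output of `docker image ls` (IDs and sizes are made up).
SAMPLE = """\
REPOSITORY   TAG       IMAGE ID       CREATED       SIZE
nginx        latest    4bb46517cac3   2 weeks ago   133MB
<none>       <none>    0d0b7f1c2a3e   3 weeks ago   72.9MB
"""


def untagged_image_ids(image_ls_output: str) -> List[str]:
    """Python equivalent of: grep "^<none>" | awk '{print $3}'."""
    ids = []
    for line in image_ls_output.splitlines():
        if line.startswith("<none>"):      # grep "^<none>": REPOSITORY is <none>
            fields = line.split()          # awk splits on runs of whitespace
            if len(fields) >= 3:
                ids.append(fields[2])      # awk's $3 is the third field (IMAGE ID)
    return ids


if __name__ == "__main__":
    print(untagged_image_ids(SAMPLE))
```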

Stopping & Removing all Containers

docker container stop $(docker container ls -a -q) && docker container rm $(docker container ls -a -q)

Removing Dangling Volumes

docker volume rm $(docker volume ls -f dangling=true -q)

Removing all unused data (stopped containers, unused networks, dangling images and build cache; add --volumes to also remove unused volumes)

docker system prune -f

Clean all (like the above, but also removes all unused images, not just dangling ones)

docker system prune -a

Docker Swarm

Initializing the Swarm

docker swarm init --advertise-addr 192.168.10.1

Getting a Worker to Join the Swarm

docker swarm join-token worker

Getting a Manager to Join the Swarm

docker swarm join-token manager

Listing Services

docker service ls

Listing nodes

docker node ls

Creating a Service

docker service create --name vote -p 8080:80 instavote/vote

Listing Swarm Tasks

docker service ps vote

Scaling a Service

docker service scale vote=3

Updating a Service

docker service update --image instavote/vote:movies vote

docker service update --force --update-parallelism 1 --update-delay 30s nginx

docker service update --update-parallelism 5 --update-delay 2s --image instavote/vote:indent vote

docker service update --limit-cpu 2 nginx

docker service update --replicas=5 nginx
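With --update-parallelism and --update-delay, Swarm replaces a service's tasks in batches: at most N tasks at a time, with a pause between batches. The Python sketch below computes such a schedule under simplifying assumptions (every task takes the same fixed time to update, and no update fails); the function, class and task names are hypothetical. A parallelism of 0 means all tasks are updated at once, as with Docker's flag.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Batch:
    start_s: float     # seconds after the update starts
    tasks: List[str]   # tasks replaced in this batch


def rolling_update_schedule(tasks: List[str], parallelism: int, delay_s: float,
                            task_update_s: float = 0.0) -> List[Batch]:
    """Simplified model of --update-parallelism / --update-delay.

    Assumes every task takes task_update_s seconds to update and that the next
    batch starts delay_s seconds after the previous batch has finished.
    """
    if parallelism < 0:
        raise ValueError("parallelism must be >= 0")
    size = len(tasks) if parallelism == 0 else parallelism   # 0 = all at once
    batches: List[Batch] = []
    clock = 0.0
    for i in range(0, len(tasks), max(size, 1)):
        batches.append(Batch(clock, tasks[i:i + size]))
        clock += task_update_s + delay_s
    return batches


if __name__ == "__main__":
    vote_tasks = [f"vote.{n}" for n in range(1, 6)]
    for batch in rolling_update_schedule(vote_tasks, parallelism=2, delay_s=2):
        print(batch.start_s, batch.tasks)
```

For five vote tasks with parallelism 2 and a 2s delay (and instant task updates), the batches start at 0s, 2s and 4s, the last one containing a single task.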

Notes:

This work was first published in Painless Docker Course

Dockerfile Instructions

FROM

Usage:

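FROM <image>[:<tag>] [AS <name>]

FROM <image>@<digest> [AS <name>]

- Initializes a new build stage and sets the base image for the instructions that follow.

- A valid Dockerfile must start with FROM; only ARG instructions may come before it.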

MAINTAINER

Usage:

MAINTAINER <name>

- The MAINTAINER instruction sets the Author field of the generated images. It is deprecated; use LABEL maintainer="<name>" instead.

RUN

Usage:

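RUN <command> (shell form; on Linux the command runs in /bin/sh -c by default)

RUN ["executable", "param1", "param2"] (exec form)

- Executes the command in a new layer on top of the current image and commits the result.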

CMD

Usage:

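CMD ["executable", "param1", "param2"] (exec form, preferred)

CMD ["param1", "param2"] (default parameters to ENTRYPOINT)

CMD command param1 param2 (shell form)

- Provides the defaults for an executing container. If you list more than one CMD, only the last one takes effect.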

LABEL

Usage:

LABEL <key>=<value> [<key>=<value> ...]

- Adds metadata to an image as key-value pairs.

EXPOSE

Usage:

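EXPOSE <port>[/<protocol>] [<port>[/<protocol>] ...]

- Informs Docker that the container listens on the given network ports at runtime (TCP by default). It does not publish the ports; use docker run -p or -P for that.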

ENV

Usage:

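ENV <key>=<value> [<key>=<value> ...]

ENV <key> <value>

- Sets environment variables that are available to later instructions in the build and persist in containers run from the resulting image.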

ADD

Usage:

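ADD [--chown=<user>:<group>] <src>... <dest>

ADD [--chown=<user>:<group>] ["<src>",... "<dest>"]

- Copies files, directories or remote file URLs from <src> and adds them to the image filesystem at <dest>. A local tar archive in a recognized compression format is extracted automatically.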

COPY

Usage:

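COPY [--chown=<user>:<group>] <src>... <dest>

COPY [--chown=<user>:<group>] ["<src>",... "<dest>"]

- Copies files or directories from the build context into the image filesystem at <dest>. Unlike ADD, it does not download URLs or extract archives.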

ENTRYPOINT

Usage:

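ENTRYPOINT ["executable", "param1", "param2"] (exec form, preferred)

ENTRYPOINT command param1 param2 (shell form)

- Configures a container that will run as an executable. With the exec form, arguments given to docker run <image> are appended after the ENTRYPOINT and replace any CMD defaults.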

VOLUME

Usage:

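VOLUME ["/data"]

VOLUME /var/log /var/db

- Creates a mount point with the specified name and marks it as holding externally mounted volumes from the native host or other containers.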

USER

Usage:

USER <user>[:<group>]

USER <UID>[:<GID>]

- The USER instruction sets the user name (or UID) and optionally the user group (or GID) to use when running the image and for any RUN, CMD and ENTRYPOINT instructions that follow it in the Dockerfile.

WORKDIR

Usage:

WORKDIR /path/to/workdir

- Sets the working directory for any RUN, CMD, ENTRYPOINT, COPY and ADD instructions that follow it.

- It can be used multiple times in one Dockerfile. If a relative path is provided, it will be relative to the path of the previous WORKDIR instruction.

ARG

Usage:

ARG <name>[=<default value>]

- Defines a variable that users can pass at build time to the builder with the docker build command using the --build-arg <varname>=<value> flag.

- Multiple variables may be defined by specifying ARG multiple times.

- It is not recommended to use build-time variables for passing secrets like GitHub keys, user credentials, etc. Build-time variable values are visible to any user of the image with the docker history command.

- Environment variables defined using the ENV instruction always override an ARG instruction of the same name.

- Docker has a set of predefined ARG variables that you can use without a corresponding ARG instruction in the Dockerfile: HTTP_PROXY and http_proxy, HTTPS_PROXY and https_proxy, FTP_PROXY and ftp_proxy, NO_PROXY and no_proxy.
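The precedence rules above can be summarized in a small Python sketch. It is a simplified model, not Docker's implementation: it assumes every ARG and ENV instruction appears before the instruction that uses the variable, and it ignores build stages and ${VAR:-default} expansion. The function name is hypothetical.

```python
from typing import Dict, Optional

# Predefined proxy ARGs that need no ARG instruction (from the list above).
PREDEFINED_ARGS = {"HTTP_PROXY", "http_proxy", "HTTPS_PROXY", "https_proxy",
                   "FTP_PROXY", "ftp_proxy", "NO_PROXY", "no_proxy"}


def resolve_build_variable(name: str,
                           arg_defaults: Dict[str, Optional[str]],
                           build_args: Dict[str, str],
                           env: Dict[str, str]) -> Optional[str]:
    """Value of `name` as seen by a later instruction (simplified model).

    arg_defaults: ARG instructions in the Dockerfile, name -> default (or None)
    build_args:   values passed with docker build --build-arg NAME=VALUE
    env:          ENV instructions in the Dockerfile, name -> value
    """
    if name in env:                     # ENV always overrides an ARG of the same name
        return env[name]
    if name in arg_defaults or name in PREDEFINED_ARGS:
        # a --build-arg value wins over the ARG default
        return build_args.get(name, arg_defaults.get(name))
    return None                         # a --build-arg without a matching ARG is not used
```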

ONBUILD

Usage:

ONBUILD <INSTRUCTION>

- Adds to the image a trigger instruction to be executed at a later time, when the image is used as the base for another build. The trigger will be executed in the context of the downstream build, as if it had been inserted immediately after the FROM instruction in the downstream Dockerfile.

- Any build instruction can be registered as a trigger.

- Triggers are inherited by the "child" build only. In other words, they are not inherited by "grand-children" builds.

- The ONBUILD instruction may not trigger FROM, MAINTAINER, or ONBUILD instructions.
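The following Python sketch models the inheritance rule with hypothetical Image and build names. It is a toy model, not how Docker stores images: a child build runs its parent's triggers right after FROM, but the resulting image carries only the child's own ONBUILD triggers, so a grandchild never runs them again.

```python
from dataclasses import dataclass, field
from typing import List, Optional

FORBIDDEN_TRIGGERS = {"FROM", "MAINTAINER", "ONBUILD"}


@dataclass
class Image:
    name: str
    history: List[str]                                   # instructions that produced the image
    onbuild: List[str] = field(default_factory=list)     # triggers stored in this image


def build(name: str, base: Optional[Image], instructions: List[str]) -> Image:
    """Toy model of how ONBUILD triggers run and are (not) inherited."""
    history = list(base.history) if base else []
    if base:
        history.extend(base.onbuild)      # parent's triggers run right after FROM
    triggers: List[str] = []
    for instruction in instructions:
        if instruction.startswith("ONBUILD "):
            trigger = instruction[len("ONBUILD "):].strip()
            keyword = (trigger.split() or [""])[0].upper()
            if keyword in FORBIDDEN_TRIGGERS:
                raise ValueError(f"ONBUILD may not trigger {keyword}")
            triggers.append(trigger)      # stored for later, not run in this build
        else:
            history.append(instruction)
    # Only this build's own triggers are stored; the base's triggers are dropped,
    # which is why grandchild builds never run them.
    return Image(name, history, triggers)


if __name__ == "__main__":
    parent = build("parent", None, ["RUN echo base", "ONBUILD COPY . /app"])
    child = build("child", parent, ["RUN make"])
    print(child.history, child.onbuild)
```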

STOPSIGNAL

Usage:

STOPSIGNAL <signal>

- The STOPSIGNAL instruction sets the system call signal that will be sent to the container to exit. The signal can be a valid unsigned signal number, for instance 9, or a signal name in the format SIGNAME, for instance SIGKILL. If it is not set, Docker sends SIGTERM.
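A signal name and its number are two spellings of the same value. The Python sketch below converts either form to a number using the host's signal table from Python's standard signal module, so it assumes a Linux or other POSIX host, where SIGKILL is 9 and SIGTERM is 15. Accepting names without the SIG prefix is a convenience of this sketch, and the function name is hypothetical.

```python
import signal


def stop_signal_number(value: str) -> int:
    """Convert a STOPSIGNAL value such as "SIGKILL", "KILL" or "9" to a number."""
    value = value.strip()
    if value.isdigit():
        return int(value)                    # already an unsigned signal number
    name = value.upper()
    if not name.startswith("SIG"):
        name = "SIG" + name                  # convenience of this sketch
    return int(signal.Signals[name])         # raises KeyError for unknown names


if __name__ == "__main__":
    print(stop_signal_number("SIGKILL"), stop_signal_number("9"), stop_signal_number("term"))
```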

HEALTHCHECK

Usage:

HEALTHCHECK [OPTIONS] CMD <command> (check container health by running a command inside the container)

HEALTHCHECK NONE (disable any healthcheck inherited from the base image)

- Tells Docker how to test a container to check that it is still working.

- Whenever a health check passes, the container becomes healthy. After a certain number of consecutive failures, it becomes unhealthy.

- The options that can appear before CMD are: --interval=<duration> (default: 30s), --timeout=<duration> (default: 30s) and --retries=<N> (default: 3).

- The health check will first run interval seconds after the container is started, and then again interval seconds after each previous check completes. If a single run of the check takes longer than timeout seconds, the check is considered to have failed. It takes retries consecutive failures of the health check for the container to be considered unhealthy.

- There can only be one HEALTHCHECK instruction in a Dockerfile. If you list more than one, only the last HEALTHCHECK takes effect.

- The command's exit status indicates the health status of the container: 0 means success (the container is healthy and ready for use), 1 means unhealthy (the container is not working correctly), and 2 is reserved (do not use this exit code).

- The first 4096 bytes of stdout and stderr from the <command> are stored and can be queried with docker inspect.

- When the health status of a container changes, a health_status event is generated with the new status.
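The timing and retry rules above can be simulated with a small Python sketch. It is a simplified model with hypothetical function names: it treats any non-zero exit code, or a run cut off at the timeout, as a failure, and it ignores any other HEALTHCHECK options.

```python
from typing import List


def check_start_times(durations: List[float], interval: float = 30.0,
                      timeout: float = 30.0) -> List[float]:
    """Seconds after container start at which each health check begins.

    The first check runs `interval` after start; each later one runs `interval`
    after the previous check completes. A check is cut off at `timeout`.
    """
    starts: List[float] = []
    t = interval
    for duration in durations:
        starts.append(t)
        t += min(duration, timeout) + interval
    return starts


def health_statuses(exit_codes: List[int], retries: int = 3) -> List[str]:
    """Health status after each check (non-zero exit or timeout = failure)."""
    status, failures, history = "starting", 0, []
    for code in exit_codes:
        if code == 0:
            status, failures = "healthy", 0       # any pass resets the failure streak
        else:
            failures += 1
            if failures >= retries:               # `retries` consecutive failures
                status = "unhealthy"
        history.append(status)
    return history
```

For example, health_statuses([1, 0, 1, 1, 1]) returns starting, healthy, healthy, healthy, unhealthy: one failure before the first pass leaves the container starting, and only the third consecutive failure makes it unhealthy.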

SHELL

Usage:

SHELL ["<executable>", "<param1>", "<param2>"]

- Allows the default shell used for the shell form of commands to be overridden. The default is ["/bin/sh", "-c"] on Linux and ["cmd", "/S", "/C"] on Windows.

- Each SHELL instruction overrides all previous SHELL instructions and affects all subsequent instructions.

- Allows an alternate shell to be used, such as zsh, csh, tcsh, powershell and others.

Tuesday, November 19, 2019

HELM CHEAT SHEET

Install Helm on Windows with Chocolatey :

choco install -y kubernetes-helm

helm repo add stable https://kubernetes-charts.storage.googleapis.com

helm repo add bitnami https://charts.bitnami.com/bitnami

helm repo update

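- helm version --short: show the installed Helm version

- helm search: search for charts (helm search repo searches the repositories you have added)

- helm fetch: download a chart to a local directory so you can inspect it

- helm install: install a chart into the Kubernetes cluster as a release

- helm list: list the releases of charts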

Helm Search Repositories :

helm search repo bitnami/nginx

helm list

helm ls --all

Helm Install and Uninstall Package :

helm install mywebserver bitnami/nginx

helm install dashboard-demo stable/kubernetes-dashboard --set rbac.clusterAdminRole=true

helm uninstall mywebserver

helm delete mywebserver

Helm Rollback :

helm status mywebserver

helm history mywebserver

helm rollback mywebserver 1

Kubectl Help :

kubectl get svc,po,deploy

kubectl describe deployment mywebserver

kubectl get pods -l app.kubernetes.io/name=nginx

kubectl get service mywebserver-nginx -o wide

kubectl get pods -n kube-system

CLUSTERS FOR PRACTICE :

- Play with Kubernetes: A simple, interactive and fun playground to learn Kubernetes

- Katacoda’s Kubernetes Playground: no installs or setup, just tutorials on a cluster

- Minikube: local testing and getting comfortable with kubectl

- Google Kubernetes Engine (GKE): by far the fastest way to get a multi-node cluster running.

- Kelsey Hightower’s Kubernetes The Hard Way: the definitive way to learn how to set up a cluster.

- Kubernetes Examples: how to run real applications with Kubernetes

Setting Up Kubernetes (K8s) on Windows

DOWNLOAD & INSTALL :

1. Install Google Cloud SDK

2. Install kubectl

gcloud components install kubectl

3. Install minikube

Download the minikube binary for Windows, rename it to minikube.exe and copy it to C:\ and to C:\Program Files (x86)\Google\Cloud SDK\google-cloud-sdk\bin

4. Start Minikube

minikube version

minikube start

minikube start --show-libmachine-logs --alsologtostderr

minikube status

minikube ssh

minikube stop

minikube delete

minikube logs

Enable minikube addons:

minikube addons list

minikube addons enable dashboard

5. kubectl Helper Commands:

kubectl version -o json

kubectl get namespace

kubectl config use-context minikube

kubectl cluster-info

kubectl config view

kubectl get nodes

kubectl get all

kubectl get all -n kube-system

6. Installing K8s Dashboard using kubectl

1. Deploy the Kubernetes dashboard to your single node cluster

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

2. Create a file => dashboard-admin-sa.yaml

# ------------------- Dashboard Service Account ------------------- #
apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard-admin-sa
  namespace: kube-system
---
# ------------------- Dashboard-Cluster-Role ------------------- #
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: dashboard-admin-sa
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: dashboard-admin-sa
  namespace: kube-system

3. Open Cmder (or any other terminal)

4. Apply the service account and cluster role binding to your cluster

kubectl apply -f dashboard-admin-sa.yaml

5. Start the kubectl proxy

kubectl proxy

6. Get Token

kubectl get secrets -n kube-system

kubectl describe secrets dashboard-admin-sa -n kube-system

On Kubernetes 1.24 and later, a token Secret is no longer created automatically for a service account; create a token with kubectl create token dashboard-admin-sa -n kube-system instead.

7. Run Dashboard

minikube addons open dashboard

minikube dashboard --url

curl http://127.0.0.1:8001/

http://localhost:8001/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy/

8. Delete Dashboard

kubectl get all -n kube-system

kubectl delete deployment kubernetes-dashboard --namespace kube-system

Delete the related service, role, rolebinding, service account (sa) and secret in the same way.

9. Choose Token

On the dashboard sign-in page, choose Token, paste the token from step 6 and choose SIGN IN.

Intro to Kubernetes

What is Kubernetes? Introduction to Kubernetes

Kubernetes is an orchestration engine and open-source platform for managing containerized application workloads and services that facilitates both declarative configuration and automation. Kubernetes is also commonly referred to as K8s.

Kubernetes Components

Web UI (Dashboard) :

Dashboard is a web-based Kubernetes user interface. You can use Dashboard to deploy containerized applications to a Kubernetes cluster, troubleshoot your containerized application, and manage the cluster itself along with its attendant resources.

Kubectl :

Kubectl is a command line tool (CLI) for Kubernetes used to interact with the Kubernetes API server on the master node. Kubectl reads a configuration file called kubeconfig, which holds the server address and the authentication information needed to access the API server.

Kubernetes Master :

The Kubernetes master is the main node responsible for managing the entire Kubernetes cluster.

It handles the orchestration of the worker nodes.

It has three main components that take care of communication, scheduling and controllers.

API Server - The kube-apiserver exposes the Kubernetes API; it is the front end of the Kubernetes control plane.

Scheduler - The scheduler watches for newly created pods that have no node assigned and selects a node for each of them to run on.

Kube-Controller-Manager - The controller manager runs the controllers, background processes that handle routine tasks in the Kubernetes cluster.

Some of the controllers are,

Node controller - It is responsible for noticing and responding when nodes go down.

Replication controller - It maintains the number of pods: it controls how many identical copies of a pod should be running somewhere on the cluster.

ReplicaSet controllers ensure that the specified number of pod replicas is running at all times.

Endpoints controllers join Services and Pods together.

Service Account and Token controllers create default accounts and API access tokens for new namespaces.

Deployment controller provides declarative updates for Pods and ReplicaSets.

DaemonSet controller ensures that all (or some) nodes run a copy of a specific pod.

Jobs controller is the supervisor process for pods carrying out batch jobs.

Services allow communication with a set of pods.

StatefulSets manage pods that need ordering and uniqueness guarantees.
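The controllers above share one control-loop idea: compare the desired state with the observed state and act on the difference. A minimal Python sketch of one pass of a replication-style loop, with hypothetical pod names. A real controller also watches the API server, handles failures and chooses carefully which pods to delete; this sketch simply deletes the last ones in the list.

```python
from typing import List, Tuple


def reconcile(desired: int, running: List[str], next_id: int = 0) -> Tuple[List[str], List[str]]:
    """One pass of a replication-style control loop.

    Returns (pods_to_create, pods_to_delete) so that len(running) becomes desired.
    """
    if desired < 0:
        raise ValueError("desired replica count must be >= 0")
    missing = desired - len(running)
    if missing > 0:
        return [f"pod-{next_id + i}" for i in range(missing)], []
    return [], running[desired:]     # surplus pods (empty when counts already match)


if __name__ == "__main__":
    print(reconcile(3, ["pod-0"], next_id=1))
```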

Etcd :

etcd is a distributed key-value store. Kubernetes uses etcd as its database to store all cluster data, such as job scheduling information, pod details and state information.

Worker Nodes :

Worker nodes are the nodes where the applications actually run in a Kubernetes cluster; they are also known as minions. Each worker node is controlled by the master node through the kubelet process.

A container runtime must be running on each worker node; it works together with the kubelet to run the containers. This is why we use Docker Engine, which takes care of managing images and containers. Other container runtimes, such as CoreOS rkt (Rocket), can also be used.

Requirements of Worker Nodes:

1. kubelet must be running

2. Docker container platform

3. kube-proxy must be running

4. supervisord

Kubelet :

The kubelet is the primary node agent that runs on each node. It reads the container manifests (pod specs) and ensures that the containers described in them are running and healthy.

Kube-proxy :

Kube-proxy is a network proxy and load balancer that runs on each worker node and implements Services on that node. It routes TCP and UDP traffic and forwards connections to the right pods. Services on worker nodes can be exposed to the internet via kube-proxy.

Pods :

A group of one or more containers deployed to a single node.

Containers in a pod share an IP Address, hostname and other resources.

Containers within the same pod have access to shared volumes.

Pods abstract network and storage away from the underlying container. This lets you move containers around the cluster more easily.

With Horizontal Pod Autoscaling, the number of Pods in a Deployment can be scaled up or down automatically based on CPU usage.

Each Pod has its own unique IP address within the cluster.

Any data saved inside a Pod disappears with the Pod unless it is written to persistent storage.

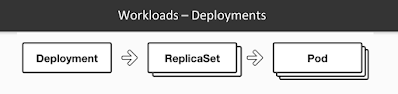
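The Horizontal Pod Autoscaler computes the replica count as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), and skips scaling when that ratio is within a tolerance of 1.0 (0.1 by default). A Python sketch of the formula using CPU utilization; the min/max defaults below are hypothetical values chosen for the example, and the real controller also smooths its decisions over time (for example with a scale-down stabilization window).

```python
import math


def desired_replicas(current_replicas: int, current_cpu: float, target_cpu: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)."""
    ratio = current_cpu / target_cpu
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas                    # close enough to the target
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))   # clamp to [min, max]


if __name__ == "__main__":
    # 3 replicas at 90% CPU with a 50% target scale out to ceil(3 * 1.8) = 6
    print(desired_replicas(3, 90, 50))
```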

Deployment:

A deployment is a blueprint for the Pods to be created.

It handles updates of its Pods.

A Deployment creates Pods from the Pod template in its spec.

Its goal is to keep the Pods running and to update them (with rolling updates) in a controlled way.

The resource usage of the Pod(s) can be specified in the Deployment.

A Deployment can scale the number of Pod replicas up or down.

Service

A Service is responsible for making our Pods discoverable inside the network or exposing them to the internet. A Service identifies its Pods by their labels, using a label selector.
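A Service's selector is a set of key=value pairs, and a Pod belongs to the Service when its labels contain all of them. The Python sketch below shows that equality-based matching with hypothetical pod names and labels. In Kubernetes a Service without a selector gets no automatically managed endpoints; to mirror that, an empty selector here selects nothing.

```python
from typing import Dict, List


def select_pods(selector: Dict[str, str], pods: Dict[str, Dict[str, str]]) -> List[str]:
    """Names of the pods whose labels contain every key=value pair in `selector`."""
    if not selector:
        return []   # mirror a Service without a selector: no automatic endpoints
    return [name for name, labels in pods.items()
            if all(labels.get(key) == value for key, value in selector.items())]


if __name__ == "__main__":
    pods = {
        "web-1": {"app": "nginx", "tier": "frontend"},
        "web-2": {"app": "nginx"},
        "db-1": {"app": "postgres"},
    }
    print(select_pods({"app": "nginx"}, pods))
```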

Types of services available:

1. ClusterIP

The deployment is only visible inside the cluster

The deployment gets an internal ClusterIP assigned to it

Traffic is load balanced between the Pods of the deployment

2. NodePort

The deployment is visible inside the cluster

The Service is bound to the same port on every node (by default from the range 30000 to 32767)

Each Node will proxy that port to your Service

The service is available at http(s)://<NodeIP>:<NodePort>/

Traffic is load balanced between the Pods of the deployment

3. LoadBalancer

The deployment gets a public IP address assigned (by the cloud provider's load balancer)

The service is available at http(s)://<PublicIP>:<Port>/

Traffic is load balanced between the Pods of the deployment

We hope this has given you an idea of the basics of Kubernetes. The next post shows how to install and configure a Kubernetes cluster with Docker on Linux.

Advantages of Kubernetes

Kubernetes can speed up the development process by making deployments and updates (rolling updates) easy and automated, and by managing our apps and services with almost zero downtime. It also provides self-healing: Kubernetes can detect when a process crashes inside a container and restart it.

Kubernetes Architecture

Kubernetes Components

Dashboard is a web-based Kubernetes user interface. You can use Dashboard to deploy containerized applications to a Kubernetes cluster, troubleshoot your containerized application, and manage the cluster itself along with its attendant resources.

Kubectl :

Kubectl is a command line configuration tool (CLI) for Kubernetes used to interact with master node of kubernetes. Kubectl has a config file called kubeconfig, this file has the information about server and authentication information to access the API Server.

Kubernetes Master :

Kubernetes Master is a main node responsible for managing the entire kubernetes clusters.

It handles the orchestration of the worker nodes.

It has three main components that take care of communication, scheduling and controllers.

API Server - Kube API Server interacts with API, Its a frontend of the kubernetes control plane.

Scheduler - Scheduler watches the pods and assigns the pods to run on specific hosts.

Kube-Controller-Manager - Controller manager runs the controllers in background which runs different tasks in Kubernetes cluster.

Some of the controllers are,

Node controller - Its responsible for noticing and responding when nodes go down.

Replication controllers - It maintains the number of pods. It controls how many identical copies of a pod should be running somewhere on the cluster

Replicasets controllers ensure number of replication of pods running at all time.

Endpoint controllers joins services and pods together.

Services account and Token controllers handles access managements.

Deployment controller provides declarative updates for pods and replicasets.

Daemon sets controller ensure all nodes run a copy of specific pods.

Jobs controller is the supervisor process for pods carrying out batch jobs

Services allow the communication.

Sateful sets specialized pod which offers ordering and uniqueness

Etcd :

etcd is a simple distribute key value store. kubernetes uses etcd as its database to store all cluster data. some of the data stored in etcd is job scheduling information, pods, state information and etc.

Worker Nodes:

Worker nodes are the nodes where applications actually run in a Kubernetes cluster. A worker node is also known as a minion. Each worker node is controlled by the master node through the kubelet process.

A container platform must be running on each worker node; it works together with the kubelet to run the containers. This is why we use Docker Engine, which takes care of managing images and containers. We can also use other container platforms, such as CoreOS rkt (Rocket).

Requirements of Worker Nodes:

1. kubelet must be running

2. Docker container platform

3. kube-proxy must be running

4. supervisord

Kubelet:

Kubelet is the primary node agent that runs on each node. It reads the container manifests and ensures that the containers they describe are running and healthy.

Kube-proxy:

Kube-proxy is a process that provides a network proxy and load balancer for the services on a single worker node. It performs network routing for TCP and UDP packets and performs connection forwarding. Worker nodes can be exposed to the internet via kube-proxy.
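Kube-proxy's load balancing can be pictured as choosing one Pod endpoint for each new connection. The sketch below uses round-robin selection. This is a simplification: kube-proxy's iptables mode, for example, picks a backend at random by default. `RoundRobinProxy` and the Pod addresses are hypothetical.

```python
from itertools import cycle
from typing import List


class RoundRobinProxy:
    """Hypothetical sketch: spread a Service's connections over its Pod
    endpoints in round-robin order."""

    def __init__(self, endpoints: List[str]) -> None:
        if not endpoints:
            raise ValueError("a service needs at least one endpoint")
        self._endpoints = cycle(list(endpoints))

    def pick(self) -> str:
        """Return the endpoint that should receive the next connection."""
        return next(self._endpoints)


proxy = RoundRobinProxy(["10.244.1.5:8080", "10.244.2.7:8080"])
```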

Pods:

A group of one or more containers deployed to a single node.

Containers in a pod share an IP address, hostname and other resources.

Containers within the same pod have access to shared volumes.

Pods abstract network and storage away from the underlying container. This lets you move containers around the cluster more easily.

With Horizontal Pod Autoscaling, Pods of a Deployment can be automatically started and halted based on CPU usage.

Each Pod has its own unique IP address within the cluster.

Any data saved inside the Pod will disappear when the Pod goes away unless persistent storage is used.
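The Horizontal Pod Autoscaler's core rule is documented as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The function below is a minimal sketch of that rule for CPU utilization. Its min/max bounds of 1 and 10 are illustrative assumptions. The real controller also applies a tolerance and stabilization windows, which are left out here.

```python
import math


def desired_replicas(current_replicas: int, current_cpu: float, target_cpu: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Sketch of the Horizontal Pod Autoscaler's scaling rule:
    desired = ceil(current_replicas * current_cpu / target_cpu),
    clamped to [min_replicas, max_replicas]. Tolerance and stabilization
    windows used by the real controller are omitted."""
    if target_cpu <= 0:
        raise ValueError("target utilization must be positive")
    raw = math.ceil(current_replicas * current_cpu / target_cpu)
    return max(min_replicas, min(max_replicas, raw))
```

For example, 3 Pods averaging 90% CPU against a 60% target give ceil(3 * 90 / 60) = ceil(4.5) = 5 replicas.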

Deployment:

A deployment is a blueprint for the Pods to be created.

Handles updates of its respective Pods.

A deployment creates Pods from the spec in its Pod template.

Its goal is to keep the Pods running and to update them (with rolling updates) in a controlled way.

Pod resource usage can be specified in the deployment.

A deployment can scale up the number of Pod replicas.
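A rolling update replaces old Pods with new ones a few at a time, so the Deployment never drops below its desired size. The sketch below simulates this under stated assumptions. maxUnavailable is treated as 0, and `max_surge` is a whole number of Pods (Kubernetes itself defaults both settings to 25%). New Pods are also assumed to become Ready immediately. `rolling_update` is a hypothetical helper.

```python
from typing import List, Tuple


def rolling_update(replicas: int, max_surge: int = 1) -> List[Tuple[int, int]]:
    """Simulate a rolling update with maxUnavailable = 0.

    Each round starts up to `max_surge` new Pods (assumed Ready at once),
    then removes the same number of old Pods. Returns the (old, new)
    Pod counts after every change."""
    if replicas < 0 or max_surge < 1:
        raise ValueError("need replicas >= 0 and max_surge >= 1")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0:
        batch = min(max_surge, old)
        new += batch              # surge: total briefly exceeds `replicas`
        steps.append((old, new))
        old -= batch              # retire old Pods only after new ones are up
        steps.append((old, new))
    return steps
```

For 3 replicas with the default `max_surge=1`, the steps are (3, 0), (3, 1), (2, 1), (2, 2), (1, 2), (1, 3), (0, 3). The total never falls below 3 and never exceeds 4.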

Service

A Service is responsible for making our Pods discoverable inside the network or exposing them to the internet. A Service identifies its Pods by its label selector.
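A Service finds its Pods by comparing its label selector with each Pod's labels. The sketch below implements the equality-based form of that matching. The Pod names and labels are hypothetical, and in a real cluster the matching happens inside Kubernetes rather than in client code.

```python
from typing import Dict, List

Labels = Dict[str, str]


def matches(selector: Labels, labels: Labels) -> bool:
    """Equality-based selector: every key/value pair in the selector must
    appear in the Pod's labels; extra Pod labels are ignored."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_pods(selector: Labels, pods: Dict[str, Labels]) -> List[str]:
    """Names of the Pods a Service with this selector would route to."""
    if not selector:
        # A Service without a selector does not pick up Pods automatically.
        return []
    return [name for name, labels in pods.items() if matches(selector, labels)]


pods = {
    "web-1": {"app": "web", "tier": "frontend"},
    "web-2": {"app": "web"},
    "db-1": {"app": "db"},
}
```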

Types of services available:

1. ClusterIP

The deployment is only visible inside the cluster

The deployment gets an internal ClusterIP assigned to it

Traffic is load balanced between the Pods of the deployment

2. Node Port

The deployment is visible inside the cluster

The deployment is bound to the same port (the NodePort) on every Node

Each Node will proxy that port to your Service

The service is available at http(s)://<NodeIP>:<NodePort>/

Traffic is load balanced between the Pods of the deployment

3. Load Balancer

The deployment gets a Public IP address assigned

The service is available at http(s)://<PublicIP>:<Port>/

Traffic is load balanced between the Pods of the deployment

We hope this has given you an idea of the basics of Kubernetes. In the next post, we show you how to install and configure a Kubernetes cluster with Docker on Linux.

Also refer to these other articles:

Learn Kubernetes Basics Beginners Guide

How to Install Kubernetes Cluster with Docker on Linux

Create Kubernetes Deployment, Services & Pods Using Kubectl

Create Kubernetes YAML for Deployment, Service & Pods

Monday, November 18, 2019

Saturday, November 16, 2019

GraphQL

REST is a software architectural style that defines a set of constraints for creating web services. It was introduced in 2000.

GraphQL, by contrast, is a data query and manipulation language for APIs, and a runtime for fulfilling those queries with existing data. It was developed internally by Facebook in 2012.

The following image shows the timeline from RPC to GraphQL.

Advantages of GraphQL over REST

- Resolves over-fetching and under-fetching

Apps using REST APIs suffer from over-fetching, because all of the data behind an endpoint is returned in JSON format even when only part of it is needed. They also suffer from under-fetching, because a single endpoint often does not return everything a view needs, so further requests are required. This causes performance and scalability issues.

GraphQL, by contrast, uses queries, schemas, and resolvers to let developers design API calls that request only the specific data they need. This resolves the over-fetching and under-fetching challenges.
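To make over-fetching concrete, the plain-Python sketch below contrasts two handlers. The REST-style handler always returns the whole record, while the GraphQL-style handler returns only the requested fields. It does not use a GraphQL library. `USERS`, `rest_get_user` and `graphql_style_get_user` are hypothetical names chosen for illustration.

```python
import json
from typing import Dict, List

# Hypothetical data store: a user record with more fields than a view needs.
USERS: Dict[int, dict] = {
    1: {"id": 1, "name": "Ada", "email": "ada@example.com",
        "city": "London", "followers": 1200, "bio": "Writes about engines."},
}


def rest_get_user(user_id: int) -> dict:
    """REST-style endpoint (think GET /users/1): always returns every field."""
    return dict(USERS[user_id])


def graphql_style_get_user(user_id: int, fields: List[str]) -> dict:
    """GraphQL-style resolution: return only the selected fields, as a
    query like `{ user(id: 1) { name email } }` would."""
    record = USERS[user_id]
    unknown = [f for f in fields if f not in record]
    if unknown:
        raise KeyError(f"unknown fields: {unknown}")
    return {f: record[f] for f in fields}


full = rest_get_user(1)
slim = graphql_style_get_user(1, ["name", "email"])
print(len(json.dumps(full)), "bytes vs", len(json.dumps(slim)), "bytes")
```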

- Faster product iterations on the frontend

REST APIs can become a bottleneck for fast iterations on the frontend. The reason is that REST endpoints are designed according to the views in the application, so when a view's data needs change, the backend often has to change too.

With GraphQL, developers can write queries specifying their data requirements, and frontend development can keep iterating without having to change the backend.

- GraphQL enables better analytics on the backend

Apps that use REST APIs get the entire data of an endpoint every time. As a result, the application owner can't gain insights into the usage of specific data elements.

GraphQL, on the other hand, uses resolvers, each of which implements a particular field in a type. That way, the application owner can track the usage and performance of the resolvers and find out whether the system needs performance tuning.
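Because each field has its own resolver, per-field metrics come almost for free. The sketch below wraps resolvers in a hypothetical `tracked` decorator that counts calls and accumulates run time per field. The `User` fields, their resolvers and the `execute` helper are illustrative stand-ins, not a real GraphQL server's API.

```python
import functools
import time
from collections import defaultdict
from typing import Callable, Dict, List

# Per-field usage and timing, collected as resolvers run.
field_calls: Dict[str, int] = defaultdict(int)
field_seconds: Dict[str, float] = defaultdict(float)


def tracked(field_name: str) -> Callable:
    """Decorator that records how often a field's resolver runs and how long it takes."""
    def decorator(resolver: Callable) -> Callable:
        @functools.wraps(resolver)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return resolver(*args, **kwargs)
            finally:
                field_calls[field_name] += 1
                field_seconds[field_name] += time.perf_counter() - start
        return wrapper
    return decorator


@tracked("User.name")
def resolve_name(user: dict) -> str:
    return user["name"]


@tracked("User.email")
def resolve_email(user: dict) -> str:
    return user["email"]


RESOLVERS = {"name": resolve_name, "email": resolve_email}


def execute(user: dict, fields: List[str]) -> dict:
    """Run only the resolvers for the fields a query selected."""
    return {f: RESOLVERS[f](user) for f in fields}
```

After a few queries, `field_calls` shows which fields clients actually request, and `field_seconds` shows which resolvers are worth tuning.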

- The advantages of the GraphQL schema

GraphQL uses the Schema Definition Language (SDL). The schema includes all the types used in an API and defines how a client can access data on the server. Once the developers have defined the schema, the frontend and backend teams can work in parallel, since both know the structure of the data. This helps to improve the productivity of the team.
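Since the schema tells both teams exactly which fields exist, a query can be checked against it before anything runs. The sketch below models a small, hypothetical schema as Python dictionaries and validates nested field selections against it. Real GraphQL servers perform this validation (plus argument and type checks) from the SDL itself.

```python
from typing import Dict, List, Optional

# Hypothetical schema, mirroring this SDL (arguments such as `id` are ignored here):
#   type Query   { student(id: ID!): Student }
#   type Student { id: ID!  name: String  batch: Batch }
#   type Batch   { year: Int }
SCHEMA: Dict[str, Dict[str, str]] = {
    "Query": {"student": "Student"},
    "Student": {"id": "ID", "name": "String", "batch": "Batch"},
    "Batch": {"year": "Int"},
}
SCALARS = {"ID", "String", "Int"}

Selection = Dict[str, Optional[dict]]   # field -> sub-selection (None for scalars)


def validate(selection: Selection, type_name: str = "Query", path: str = "") -> List[str]:
    """Check a query, written as nested dicts, against SCHEMA and return errors."""
    errors: List[str] = []
    fields = SCHEMA[type_name]
    for name, sub in selection.items():
        where = f"{path}.{name}" if path else name
        if name not in fields:
            errors.append(f"{where}: no such field on {type_name}")
            continue
        field_type = fields[name]
        if field_type in SCALARS:
            if sub is not None:
                errors.append(f"{where}: scalar field cannot have a sub-selection")
        elif not sub:
            errors.append(f"{where}: object field needs a sub-selection")
        else:
            errors.extend(validate(sub, field_type, where))
    return errors
```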

Drawbacks of REST APIs

The problem with REST APIs is that they have multiple endpoints. Every endpoint represents a resource, so if we need data from multiple resources, it takes multiple round-trips to get the data. The language available for expressing a request is also very limited in a REST API.
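The round-trip cost can be counted directly. The sketch below uses a fake client that counts requests instead of sending them, and fetches an author plus the titles of their posts in two ways. The REST way makes one call per resource; the GraphQL-style way makes one nested query. The data, paths, `CountingClient` and its methods are all hypothetical.

```python
from typing import Dict

# Hypothetical data behind two REST resources: /authors/<id> and /posts/<id>.
AUTHORS: Dict[int, dict] = {1: {"id": 1, "name": "Ada", "post_ids": [10, 11]}}
POSTS: Dict[int, dict] = {10: {"id": 10, "title": "Engines"},
                          11: {"id": 11, "title": "Notes"}}


class CountingClient:
    """Fake client that counts network round-trips instead of making them."""

    def __init__(self) -> None:
        self.requests = 0

    def rest_get(self, path: str) -> dict:
        self.requests += 1
        resource, _, raw_id = path.strip("/").partition("/")
        table = {"authors": AUTHORS, "posts": POSTS}[resource]
        return table[int(raw_id)]

    def graphql_author(self, author_id: int) -> dict:
        # One request; the server resolves the nested selection
        #   { author(id: 1) { name posts { title } } }
        self.requests += 1
        author = AUTHORS[author_id]
        return {"name": author["name"],
                "posts": [{"title": POSTS[p]["title"]} for p in author["post_ids"]]}


def author_with_titles_rest(client: CountingClient, author_id: int) -> dict:
    """REST: one call for the author, then one call per post."""
    author = client.rest_get(f"/authors/{author_id}")
    posts = [client.rest_get(f"/posts/{pid}") for pid in author["post_ids"]]
    return {"name": author["name"], "posts": [{"title": p["title"]} for p in posts]}
```

For an author with two posts, the REST path makes 3 round-trips (1 for the author plus 1 per post), while the GraphQL-style call makes 1.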

REST also has a problem with over-fetching. For example, if a client wants only specific fields of a record in a resource, the REST API will still return all of the fields, regardless of what the client needs. This wastes network and memory resources on both the client side and the server side.

Data fetching with GraphQL vs REST

In typical usage, a REST client fetches data by calling multiple API endpoints, and the server returns all of the data behind each of those endpoints.

GraphQL uses queries, schemas, and resolvers. Developers can specify the exact data they need, and they can even create and execute nested queries.

GraphQL is very useful for fetching data that satisfies a given condition. For example, instead of requesting all the students in the school, you can specifically ask for the students of a particular batch.
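The students example can be sketched as a resolver that takes an argument. The data, the `students(batch: ...)` query shape and `resolve_students` are hypothetical. The point is that the filter runs on the server, so only the matching students (and only the selected fields) are returned.

```python
from typing import List, Optional, Sequence

# Hypothetical data and resolver for a query such as
#   { students(batch: 2019) { name } }
STUDENTS = [
    {"name": "Asha", "batch": 2019},
    {"name": "Ben", "batch": 2020},
    {"name": "Chen", "batch": 2019},
]


def resolve_students(batch: Optional[int] = None,
                     fields: Sequence[str] = ("name",)) -> List[dict]:
    """Resolver with an optional `batch` argument. The filter runs on the
    server, so students from other batches are never sent to the client."""
    rows = STUDENTS if batch is None else [s for s in STUDENTS if s["batch"] == batch]
    return [{f: s[f] for f in fields} for s in rows]
```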

Monday, November 11, 2019

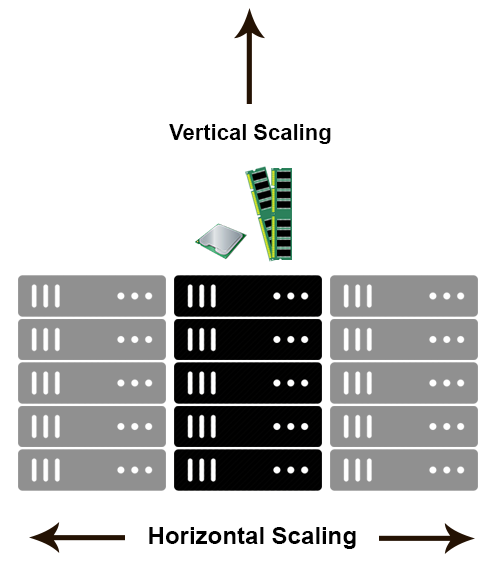

Scaling Horizontally and Vertically for Databases


Horizontal scaling means that you scale by adding more machines to your pool of resources, whereas vertical scaling means that you scale by adding more power (CPU, RAM) to an existing machine.

An easy way to remember this is to think of machines on a server rack: we add more machines across the horizontal direction and add more resources to a machine in the vertical direction.

In the database world, horizontal scaling is often based on partitioning of the data, i.e. each node contains only part of the data. In vertical scaling, the data resides on a single node and scaling is done through multi-core support, i.e. by spreading the load between the CPU and RAM resources of that machine.
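Partitioning can be sketched in a few lines: hash each key and use the result to pick the node that stores it, so every node holds only part of the data. The node names and keys below are hypothetical, and this is plain modulo hash partitioning, chosen for brevity rather than taken from any particular database.

```python
import hashlib
from typing import Dict, Iterable, List


def node_for(key: str, nodes: List[str]) -> str:
    """Pick the node that owns `key` with simple hash partitioning.
    A stable hash (SHA-256) is used because Python's built-in hash() for
    strings is randomized per process."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return nodes[int.from_bytes(digest[:8], "big") % len(nodes)]


def partition(keys: Iterable[str], nodes: List[str]) -> Dict[str, List[str]]:
    """Spread keys across nodes so each node holds only part of the data."""
    placement: Dict[str, List[str]] = {node: [] for node in nodes}
    for key in keys:
        placement[node_for(key, nodes)].append(key)
    return placement


nodes = ["db-a", "db-b", "db-c"]
placement = partition((f"user:{i}" for i in range(1000)), nodes)
for node, owned in placement.items():
    print(node, len(owned))
```

One caveat of plain modulo placement is that adding a fourth node changes `len(nodes)` and moves most keys to a different node. Systems built for elastic horizontal scaling, such as Cassandra, use consistent hashing (a token ring) instead, so only a fraction of the data has to move.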


With horizontal scaling, it is often easier to scale dynamically by adding more machines to the existing pool. Vertical scaling is often limited to the capacity of a single machine; scaling beyond that capacity often involves downtime and comes with an upper limit.

Good examples of horizontal scaling are Cassandra, MongoDB, and Google Cloud Spanner. A good example of vertical scaling is MySQL on Amazon RDS (the cloud version of MySQL), which provides an easy way to scale vertically by switching from smaller to bigger machines. This process often involves downtime.

In-Memory Data Grids such as GigaSpaces XAP and Coherence are often optimized for both horizontal and vertical scaling, simply because they're not bound to disk. They support horizontal scaling through partitioning and vertical scaling through multi-core support.

You can read more on this subject in my earlier posts: Scale-out vs Scale-up and The Common Principles Behind the NOSQL Alternatives

Friday, November 1, 2019

Docker Commands

Set Environment Settings:

- DOCKER_CERT_PATH=C:\Users\jini\.docker\machine\certs

- DOCKER_HOST=tcp://192.168.99.100:2376

- DOCKER_TLS_VERIFY=1

- DOCKER_TOOLBOX_INSTALL_PATH=C:\Program Files\Docker Toolbox

Docker Lifecycle:

- docker run creates and starts a container.

- docker stop stops it.

- docker start will start it again.

- docker restart restarts a container.

- docker rm deletes a container.

- docker kill sends a SIGKILL to a container.

- docker attach will connect to a running container.

- docker wait blocks until the container stops.

If you want to run and then interact with a container, use docker start and then docker attach to get in. If you want to poke around in an image, use docker run -t -i to open a TTY.

Docker Info:

- docker ps -a shows running and stopped containers.

- docker inspect looks at all the info on a container (including its IP address).

- docker logs gets logs from a container.

- docker events gets events from a container.

- docker port shows the public-facing port of a container.

- docker top shows running processes in a container.

- docker diff shows changed files in the container's filesystem.

Docker Images/Container Lifecycle:

- docker images shows all images.

- docker import creates an image from a tarball.

- docker build creates an image from a Dockerfile.

- docker commit creates an image from a container.

- docker rmi removes an image.

- docker insert inserts a file from a URL into an image (kind of odd, you'd think images would be immutable after creation). This command only existed in early Docker releases and has since been removed.

- docker load loads an image from a tar archive as STDIN, including images and tags (as of 0.7).

- docker save saves an image to a tar archive stream to STDOUT with all parent layers, tags & versions (as of 0.7).

Info:

- docker history shows the history of an image.

- docker tag tags an image to a name (local or registry).

Docker Compose

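Define and run multi-container applications with Docker.

docker-compose --help

Create a docker-compose.yml:

```yaml
version: '3'
services:
  eureka:
    restart: always
    build: ./micro1-eureka-server
    ports:
      - "8761:8761"
```

docker-compose stop

docker-compose rm -f

docker-compose build

docker-compose up -d

docker-compose start

docker-compose ps

Scaling containers running a given service:

docker-compose scale eureka=3

Newer Compose releases deprecate scale in favor of docker-compose up -d --scale eureka=3. In both cases, the fixed host port mapping "8761:8761" must be removed before running more than one eureka container, because only one container can bind host port 8761.

Healing, i.e., re-running containers that have stopped:

docker-compose up --no-recreate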

Docker Hub

Docker.io hosts its own index to a central registry, which contains a large number of repositories.

- docker login to log in to a registry.

- docker search searches the registry for an image.

- docker pull pulls an image from the registry to the local machine.

- docker push pushes an image to the registry from the local machine.

Dockerfile

Instructions

- .dockerignore

- FROM Sets the Base Image for subsequent instructions.

- MAINTAINER (deprecated - use LABEL instead)

- RUN execute any commands in a new layer on top of the current image

- CMD provide defaults for an executing container.

- EXPOSE informs Docker that the container listens on the specified network ports at runtime.

- ENV sets environment variable.

- ADD copies new files, directories or remote files to the container. Invalidates caches. Avoid ADD and use COPY instead.

- COPY copies new files or directories to the container. By default this copies as root regardless of the USER/WORKDIR settings. Use --chown=<user>:<group> to give ownership to another user/group. (Same for ADD.)

- ENTRYPOINT configures a container that will run as an executable.

- VOLUME creates a mount point for externally mounted volumes or other containers.

- USER sets the user name for following RUN / CMD / ENTRYPOINT commands.

- WORKDIR sets the working directory.

- ARG defines a build-time variable.

- ONBUILD adds a trigger instruction when the image is used as the base for another build.

- STOPSIGNAL sets the system call signal that will be sent to the container to exit.

- LABEL applies key/value metadata to your images, containers, or daemons.

Tutorial: Flux7’s Dockerfile Tutorial

Examples: Examples

Best Practices: Best to look at http://github.com/wsargent/docker-devenv and the best practices / take 2 for more details.

Volumes:

Docker volumes are free-floating filesystems. They don’t have to be connected to a particular container.

Volumes are useful in situations where you can’t use links (which are TCP/IP only). For instance, if you need to have two docker instances communicate by leaving stuff on the filesystem.

You can mount them in several Docker containers at once, using docker run --volumes-from.

Get Environment Settings

docker run --rm ubuntu env

Delete old containers

docker ps -a | grep 'weeks ago' | awk '{print $1}' | xargs docker rm

Delete stopped containers

docker rm $(docker ps -a -q -f status=exited)

Show image dependencies

docker images -viz | dot -Tpng -o docker.png

(The viz option only worked in early Docker releases and has since been removed.)

Original

https://github.com/wsargent/docker-cheat-sheet/blob/master/README.md
