Showing posts with label Z3_Docker. Show all posts

Thursday, October 1, 2020

Wednesday, November 20, 2019

BEST PRACTICES

This is where general Docker best practices and war stories go:

- Docker Documentation

- Docker Cheat Sheet

- Best practices for writing Dockerfiles

- Dockerfile Best Practices

- Dockerfile Best Practices 2

- Building Good Docker images

- Write Excellent Dockerfiles

- 15-docker-tips-in-5-minutes

- Everyday Hacks Docker

- Docker Image Security Best Practices

- Docker Bench Security

- Docker Engine Security Cheat Sheet

- Docker Security Best Practices for Your Vessel And Container

- Docker tips tricks and tutorials

DOCKER CHEATSHEET

Docker Registries & Repositories

Login to a Registry

docker login

docker login localhost:8080

Logout from a Registry

docker logout

docker logout localhost:8080

Searching an Image

docker search nginx

docker search --filter stars=3 --no-trunc nginx

Pulling an Image

docker image pull nginx

docker image pull eon01/nginx

docker image pull localhost:5000/myadmin/nginx

Pushing an Image

docker image push eon01/nginx

docker image push localhost:5000/myadmin/nginx

Running Containers

Create and Run a Simple Container

- Start an ubuntu:latest image

- Bind the port 80 from the CONTAINER to port 3000 on the HOST

- Mount the current directory to /data on the CONTAINER

- Note: on windows you have to change -v ${PWD}:/data to -v "C:\Data":/data
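The command these bullets describe is missing above. A minimal sketch of what it might look like follows, assuming a container name supplied by the caller (`sandbox` here is hypothetical). The wrapper only prints the command unless DOCKER=docker is set.

```shell
#!/bin/sh
# Dry run by default: the command is printed, not executed.
# Set DOCKER=docker in the environment to run it for real.
DOCKER="${DOCKER:-echo docker}"

run_simple_container() {
  # $1: container name (hypothetical; choose your own)
  # -p 3000:80         publish container port 80 on host port 3000
  # -v "${PWD}:/data"  mount the current directory at /data
  $DOCKER container run -it --name "$1" \
    -p 3000:80 \
    -v "${PWD}:/data" \
    ubuntu:latest
}

run_simple_container sandbox
```

On Windows, the -v argument would change as described in the note above.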

Creating a Container

docker container create -t -i --name infinite eon01/infinite

Running a Container

docker container run -it --name infinite -d eon01/infinite

Renaming a Container

docker container rename infinite infinity

Removing a Container

docker container rm infinite

Updating a Container

docker container update --cpu-shares 512 -m 300M infinite

Starting & Stopping Containers

Starting

docker container start nginx

Stopping

docker container stop nginx

Restarting

docker container restart nginx

Pausing

docker container pause nginx

Unpausing

docker container unpause nginx

Blocking Until a Container Stops

docker container wait nginx

Sending a SIGKILL

docker container kill nginx

Sending another signal

docker container kill -s HUP nginx

Connecting to an Existing Container

docker container attach nginx

Getting Information about Containers

Running Containers

docker container ls

docker container ls -a

Container Logs

docker logs infinite

Follow Container Logs

docker container logs infinite -f

Inspecting Containers

docker container inspect infinite

docker container inspect --format '{{ .NetworkSettings.IPAddress }}' $(docker ps -q)

Containers Events

docker system events --filter container=infinite

Public Ports

docker container port infinite

Running Processes

docker container top infinite

Container Resource Usage

docker container stats infinite

Inspecting changes to files or directories on a container’s filesystem

docker container diff infinite

Manipulating Images

Listing Images

docker image ls

Building Images

docker build .

docker build github.com/creack/docker-firefox

docker build - < Dockerfile

docker build - < context.tar.gz

docker build -t eon/infinite .

docker build -f myOtherDockerfile .

curl example.com/remote/Dockerfile | docker build -f - .

Removing an Image

docker image rm nginx

Loading a Tarred Repository from a File

docker image load < ubuntu.tar.gz

docker image load --input ubuntu.tar

Save an Image to a Tar Archive

docker image save busybox > busybox.tar

Showing the History of an Image

docker image history nginx

Creating an Image From a Container

docker container commit nginx

Tagging an Image

docker image tag nginx eon01/nginx

Pushing an Image

docker image push eon01/nginx

Networking

Creating Networks

docker network create -d overlay MyOverlayNetwork

docker network create -d bridge MyBridgeNetwork

docker network create -d overlay \
  --subnet=192.168.0.0/16 \
  --subnet=192.170.0.0/16 \
  --gateway=192.168.0.100 \
  --gateway=192.170.0.100 \
  --ip-range=192.168.1.0/24 \
  --aux-address="my-router=192.168.1.5" --aux-address="my-switch=192.168.1.6" \
  --aux-address="my-printer=192.170.1.5" --aux-address="my-nas=192.170.1.6" \
  MyOverlayNetwork

Removing a Network

docker network rm MyOverlayNetwork

Listing Networks

docker network ls

Getting Information About a Network

docker network inspect MyOverlayNetwork

Connecting a Running Container to a Network

docker network connect MyOverlayNetwork nginx

Connecting a Container to a Network When it Starts

docker container run -it -d --network=MyOverlayNetwork nginx

Disconnecting a Container from a Network

docker network disconnect MyOverlayNetwork nginx

Exposing Ports

Using a Dockerfile, you can declare the port the container listens on using:

EXPOSE <port>

You can also map the container port to a host port using the -p flag, e.g.

docker run -p $HOST_PORT:$CONTAINER_PORT --name infinite -t infinite

Security

Guidelines for building secure Docker images

- Prefer minimal base images

- Dedicated user on the image as the least privileged user

- Sign and verify images to mitigate MITM attacks

- Find, fix and monitor for open source vulnerabilities

- Don’t leak sensitive information to docker images

- Use fixed tags for immutability

- Use COPY instead of ADD

- Use labels for metadata

- Use multi-stage builds for small secure images

- Use a linter
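Several of these guidelines can be seen together in one small Dockerfile. The sketch below prints a hypothetical two-stage build from a shell function; the image tags, paths, label and the `app` user are illustrative assumptions, not part of the guidelines themselves.

```shell
#!/bin/sh
# Prints an example Dockerfile to stdout. Everything inside it
# (tags, paths, user name) is an assumption made for illustration.
example_dockerfile() {
  cat <<'EOF'
# Build stage: a fixed tag (not "latest") keeps builds reproducible
FROM golang:1.22-alpine AS build
WORKDIR /src
COPY . .
RUN go build -o /out/app .

# Runtime stage: minimal base image, no compiler or sources
FROM alpine:3.19
LABEL org.opencontainers.image.title="example-app"
# Dedicated least-privileged user instead of root
RUN addgroup -S app && adduser -S -G app app
COPY --from=build /out/app /usr/local/bin/app
USER app
ENTRYPOINT ["/usr/local/bin/app"]
EOF
}

example_dockerfile
```

Redirecting the output, e.g. example_dockerfile > Dockerfile, gives a file that can be passed to docker build and to a Dockerfile linter.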

Cleaning Docker

Removing a Running Container

docker container rm -f nginx

Removing a Container and its Volume

docker container rm -v nginx

Removing all Exited Containers

docker container rm $(docker container ls -a -f status=exited -q)

Removing All Stopped Containers

docker container rm `docker container ls -a -q`

Removing a Docker Image

docker image rm nginx

Removing Dangling Images

docker image rm $(docker image ls -f dangling=true -q)

Removing all Images

docker image rm $(docker image ls -a -q)

Removing all untagged images

docker image rm -f $(docker image ls | grep "^<none>" | awk '{print $3}')

Stopping & Removing all Containers

docker container stop $(docker container ls -a -q) && docker container rm $(docker container ls -a -q)

Removing Dangling Volumes

docker volume rm $(docker volume ls -f dangling=true -q)

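Removing stopped containers, unused networks, dangling images and build cache

docker system prune -f

Clean all (also removes every image not used by a container; add --volumes to prune unused volumes too)

docker system prune -a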

Docker Swarm

Initializing the Swarm

docker swarm init --advertise-addr 192.168.10.1

Getting a Worker to Join the Swarm

docker swarm join-token worker

Getting a Manager to Join the Swarm

docker swarm join-token manager

Listing Services

docker service ls

Listing nodes

docker node ls

Creating a Service

docker service create --name vote -p 8080:80 instavote/vote

Listing Swarm Tasks

docker service ps vote

Scaling a Service

docker service scale vote=3

Updating a Service

docker service update --image instavote/vote:movies vote

docker service update --force --update-parallelism 1 --update-delay 30s nginx

docker service update --update-parallelism 5 --update-delay 2s --image instavote/vote:indent vote

docker service update --limit-cpu 2 nginx

docker service update --replicas=5 nginx

Notes:

This work was first published in Painless Docker Course

Dockerfile Instructions

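FROM

Usage:

FROM <image>

FROM <image>:<tag>

FROM <image>@<digest>

MAINTAINER

Usage:

MAINTAINER <name>

The MAINTAINER instruction allows you to set the Author field of the generated images.

RUN

Usage:

RUN <command> (shell form)

RUN ["<executable>", "<param1>", "<param2>"] (exec form)

CMD

Usage:

CMD ["<executable>", "<param1>", "<param2>"] (exec form)

CMD ["<param1>", "<param2>"] (as default parameters to ENTRYPOINT)

CMD <command> <param1> <param2> (shell form)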

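LABEL

Usage:

LABEL <key>=<value> [<key>=<value> ...]

EXPOSE

Usage:

EXPOSE <port> [<port> ...]

ENV

Usage:

ENV <key> <value>

ENV <key>=<value> [<key>=<value> ...]

ADD

Usage:

ADD <src> [<src> ...] <dest>

ADD ["<src>", ... "<dest>"] (this form is required for paths containing whitespace)

COPY

Usage:

COPY <src> [<src> ...] <dest>

COPY ["<src>", ... "<dest>"] (this form is required for paths containing whitespace)

ENTRYPOINT

Usage:

ENTRYPOINT ["<executable>", "<param1>", "<param2>"] (exec form)

ENTRYPOINT <command> <param1> <param2> (shell form)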

VOLUME

Usage:

VOLUME ["<path>", ...]

VOLUME <path> [<path> ...]

USER

Usage:

USER <username | UID>

The USER instruction sets the user name or UID to use when running the image and for any RUN, CMD and ENTRYPOINT instructions that follow it in the Dockerfile.

WORKDIR

Usage:

WORKDIR </path/to/workdir>

- Sets the working directory for any RUN, CMD, ENTRYPOINT, COPY, and ADD instructions that follow it.

- It can be used multiple times in the one Dockerfile. If a relative path is provided, it will be relative to the path of the previous WORKDIR instruction.
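The relative-path rule for WORKDIR can be modelled in a few lines of plain shell. This is only an illustration of the resolution logic, not how Docker implements it; `resolve_workdir` is a made-up helper name.

```shell
#!/bin/sh
# Model of how successive WORKDIR values combine:
# an absolute path replaces the current directory,
# a relative path is appended to the previous one.
resolve_workdir() {
  current="/"
  for dir in "$@"; do
    case "$dir" in
      /*) current="$dir" ;;                 # absolute: start over
      *)  current="${current%/}/$dir" ;;    # relative: append to previous WORKDIR
    esac
  done
  printf '%s\n' "$current"
}

# Equivalent to: WORKDIR /a, WORKDIR b, WORKDIR c
resolve_workdir /a b c   # prints /a/b/c
```

In a real Dockerfile, a RUN pwd after those three WORKDIR lines would report the same path.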

ARG

Usage:

ARG <name>[=<default value>]

- Defines a variable that users can pass at build-time to the builder with the docker build command using the --build-arg <varname>=<value> flag.

- Multiple variables may be defined by specifying ARG multiple times.

- It is not recommended to use build-time variables for passing secrets like GitHub keys, user credentials, etc. Build-time variable values are visible to any user of the image with the docker history command.

- Environment variables defined using the ENV instruction always override an ARG instruction of the same name.

- Docker has a set of predefined ARG variables that you can use without a corresponding ARG instruction in the Dockerfile: HTTP_PROXY and http_proxy, HTTPS_PROXY and https_proxy, FTP_PROXY and ftp_proxy, NO_PROXY and no_proxy.

ONBUILD

Usage:

ONBUILD <Dockerfile INSTRUCTION>

- Adds to the image a trigger instruction to be executed at a later time, when the image is used as the base for another build. The trigger will be executed in the context of the downstream build, as if it had been inserted immediately after the FROM instruction in the downstream Dockerfile.

- Any build instruction can be registered as a trigger.

- Triggers are inherited by the "child" build only. In other words, they are not inherited by "grand-children" builds.

- The ONBUILD instruction may not trigger FROM, MAINTAINER, or ONBUILD instructions.

STOPSIGNAL

Usage:

STOPSIGNAL <signal>

The STOPSIGNAL instruction sets the system call signal that will be sent to the container to exit. This signal can be a valid unsigned number that matches a position in the kernel’s syscall table, for instance 9, or a signal name in the format SIGNAME, for instance SIGKILL.

HEALTHCHECK

Usage:

HEALTHCHECK [<options>] CMD <command> (check container health by running a command inside the container)

HEALTHCHECK NONE (disable any healthcheck inherited from the base image)

- Tells Docker how to test a container to check that it is still working.

- Whenever a health check passes, the container becomes healthy. After a certain number of consecutive failures, it becomes unhealthy.

- The options that can appear before CMD are:

--interval=<duration> (default: 30s)

--timeout=<duration> (default: 30s)

--retries=<number> (default: 3)

- The health check will first run interval seconds after the container is started, and then again interval seconds after each previous check completes. If a single run of the check takes longer than timeout seconds then the check is considered to have failed. It takes retries consecutive failures of the health check for the container to be considered unhealthy.

- There can only be one HEALTHCHECK instruction in a Dockerfile. If you list more than one then only the last HEALTHCHECK will take effect.

- The command's exit status indicates the health status of the container:

0: success - the container is healthy and ready for use

1: unhealthy - the container is not working correctly

2: reserved - do not use this exit code

- The first 4096 bytes of stdout and stderr from the command are stored and can be queried with docker inspect.

- When the health status of a container changes, a health_status event is generated with the new status.
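The exit-code convention above is easy to get wrong when a probe returns arbitrary statuses. Here is a minimal sketch of a wrapper that maps any probe command onto 0 or 1; `health_probe` is a hypothetical name, and `true` / `false` merely stand in for a real check.

```shell
#!/bin/sh
# health_probe: run a probe command and map its result to the exit codes
# that HEALTHCHECK understands (0 = healthy, 1 = unhealthy).
# Any non-zero status from the probe becomes 1, so the reserved code 2
# is never returned.
health_probe() {
  if "$@"; then
    return 0
  fi
  return 1
}

# Example probes; "true" and "false" are placeholders for a real check.
health_probe true  && echo "healthy"
health_probe false || echo "unhealthy"
```

A script built around this function could then be referenced from a Dockerfile with something like HEALTHCHECK --interval=30s --timeout=30s --retries=3 CMD followed by the script path; the path and the probe itself are up to you.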

SHELL

Usage:

SHELL ["<executable>", "<param1>", "<param2>"]

- Allows the default shell used for the shell form of commands to be overridden.

- Each SHELL instruction overrides all previous SHELL instructions, and affects all subsequent instructions.

- Allows an alternate shell to be used, such as zsh, csh, tcsh, powershell, and others.

Tuesday, November 19, 2019

Friday, November 1, 2019

Docker Commands

Set Environment Settings:

- DOCKER_CERT_PATH=C:\Users\jini\.docker\machine\certs

- DOCKER_HOST=tcp://192.168.99.100:2376

- DOCKER_TLS_VERIFY=1

- DOCKER_TOOLBOX_INSTALL_PATH=C:\Program Files\Docker Toolbox

Docker Lifecycle:

- docker run creates a container.

- docker stop stops it.

- docker start will start it again.

- docker restart restarts a container.

- docker rm deletes a container.

- docker kill sends a SIGKILL to a container.

- docker attach will connect to a running container.

- docker wait blocks until the container stops.

To get back into an existing container, run docker start, then docker attach. If you want to poke around in an image, use docker run -t -i with a shell command to open a tty.

Docker Info:

- docker ps -a shows running and stopped containers.

- docker inspect looks at all the info on a container (including IP address).

- docker logs gets logs from a container.

- docker events gets events from a container.

- docker port shows the public facing port of a container.

- docker top shows running processes in a container.

- docker diff shows changed files in the container’s FS.

Docker Images/Container Lifecycle:

- docker images shows all images.

- docker import creates an image from a tarball.

- docker build creates an image from a Dockerfile.

- docker commit creates an image from a container.

- docker rmi removes an image.

- docker insert inserts a file from a URL into an image (kind of odd, you’d think images would be immutable after create). This command is no longer available in current Docker releases.

- docker load loads an image from a tar archive as STDIN, including images and tags (as of 0.7).

- docker save saves an image to a tar archive stream to STDOUT with all parent layers, tags & versions (as of 0.7).

Info

- docker history shows the history of an image.

- docker tag tags an image to a name (local or registry).

Docker Compose

Define and run multi-container applications with Docker.

docker-compose --help

Create docker-compose.yml:

version: '3'
services:
  eureka:
    restart: always
    build: ./micro1-eureka-server
    ports:
      - "8761:8761"

docker-compose stop

docker-compose rm -f

docker-compose build

docker-compose up -d

docker-compose start

docker-compose ps

Scaling containers running a given service:

docker-compose scale eureka=3

Healing, i.e., re-running containers that have stopped:

docker-compose up --no-recreate

Docker Hub

Docker.io hosts its own index to a central registry which contains a large number of repositories.

- docker login to login to a registry.

- docker search searches the registry for an image.

- docker pull pulls an image from the registry to the local machine.

- docker push pushes an image to the registry from the local machine.

Dockerfile

Instructions

- .dockerignore

- FROM Sets the Base Image for subsequent instructions.

- MAINTAINER (deprecated - use LABEL instead)

- RUN execute any commands in a new layer on top of the current image

- CMD provide defaults for an executing container.

- EXPOSE informs Docker that the container listens on the specified network ports at runtime.

- ENV sets environment variable.

- ADD copies new files, directories or remote files to the container. Invalidates caches. Avoid ADD and use COPY instead.

- COPY copies new files or directories to the container. By default this copies as root regardless of the USER/WORKDIR settings. Use --chown=<user>:<group> to give ownership to another user/group. (Same for ADD.)

- ENTRYPOINT configures a container that will run as an executable.

- VOLUME creates a mount point for externally mounted volumes or other containers.

- USER sets the user name for following RUN / CMD / ENTRYPOINT commands.

- WORKDIR sets the working directory.

- ARG defines a build-time variable.

- ONBUILD adds a trigger instruction when the image is used as the base for another build.

- STOPSIGNAL sets the system call signal that will be sent to the container to exit.

- LABEL apply key/value metadata to your images, containers, or daemons.

Tutorial: Flux7’s Dockerfile Tutorial

Best Practices: Best to look at http://github.com/wsargent/docker-devenv and the best practices / take 2 for more details.

Volumes:

Docker volumes are free-floating filesystems. They don’t have to be connected to a particular container.

Volumes are useful in situations where you can’t use links (which are TCP/IP only). For instance, if you need to have two docker instances communicate by leaving stuff on the filesystem.

You can mount them in several docker containers at once, using docker run --volumes-from

Get Environment Settings

docker run --rm ubuntu env

Delete old containers

docker ps -a | grep 'weeks ago' | awk '{print $1}' | xargs docker rm

Delete stopped containers

docker rm `docker ps -a -q`

Show image dependencies

docker images -viz | dot -Tpng -o docker.png

Original

https://github.com/wsargent/docker-cheat-sheet/blob/master/README.md

Monday, June 12, 2017

Portainer and UI for Docker infra

Installing Portainer:

- As a prerequisite, you need Docker and Docker Swarm installed.

- Then execute the following command to install the Portainer container:

docker run -d -p 9000:9000 portainer/portainer

- To run Portainer against a local instance of the Docker engine, use the following command:

docker run -d -p 9000:9000 -v /var/run/docker.sock:/var/run/docker.sock portainer/portainer

- A public demo is available at demo.portainer.io (username admin, password tryportainer).

After the password definition, you get a login where you enter the new password.

The next step is to define the first endpoint, which is guided:

- Here you provide a name (which is shown in the UI) and the IP address of the docker-machine with the port (you get it from the $env:DOCKER_HOST environment variable).

- The docker-machine is secured by TLS, so you have to provide the certificates.

The files can be found under your home directory:

C:\Users\<username>\.docker\machine\certs

- With this UI, you can do a lot of basic tasks on the docker-machine, such as starting, stopping and killing containers, pulling images, maintaining networks, and a lot more.

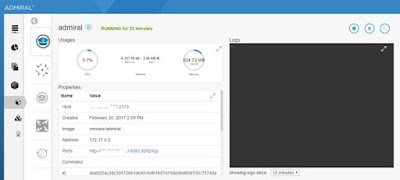

Admiral - Management UI for Containers (Docker):

VMware Admiral is a highly scalable and very lightweight Container Management platform for deploying and managing container based applications. It is designed to have a small footprint and boot extremely quickly. Admiral is intended to provide automated deployment and life-cycle management of containers.

- Rule-based resource management - Setup your deployment preferences to let Admiral manage container placement.

- Live state updates - Provides a live view of your system.

- Efficient multi-container template management - Enables logical multi-container application deployments.

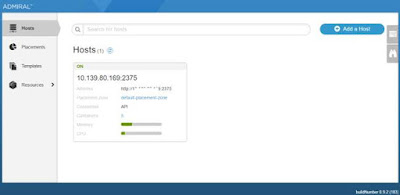

Run the following command:

docker run -d -p 8282:8282 --name admiral vmware/admiral

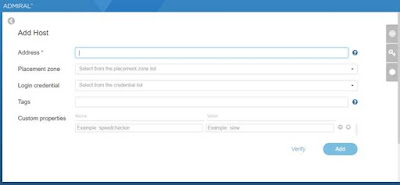

Click on Add a Host

Before you add a host, please ensure the Remote API is enabled for that host.

Please refer to my previous blog post - http://bit.ly/2kRQqOc

Fill in the details and add the host:

- Address – http://<docker-host-ip>:2375

- Placement Zone – choose default-placementzone

- Login Credentials – you will need to create new credentials and select them

Now you can manage containers or create new ones if required.

Below is the Admiral container that we created.

For more information, refer to the official GitHub project.

UI's for Docker:

As you can see, at the top we have the Docker Engine which would be running on a single host.

You then have your orchestration/scheduler interacting, with typically, several Docker Engines.

- Green; this is a command line tool / interface.

- Blue; Web Interface, this is what we are going to be covering in the post.

- Purple; Desktop App, I have included this as it is typically part of people's journey into using Docker.

Saturday, June 10, 2017

Docker Swarm Tutorial

Docker Swarm is the Docker-native clustering solution, which can turn a group of distributed Docker hosts into a single large virtual server.

- Docker Swarm provides the standard Docker API, and it can communicate with any tool that already works with the Docker daemon, allowing easy scaling to multiple hosts.

- In Docker Swarm you create one or more manager and worker machines in the cluster. The manager(s) take care of orchestrating your deployed services (e.g., creation, replication, assigning tasks to nodes, load balancing, service discovery).

- docker run --rm swarm --help

docker-machine create --driver virtualbox --virtualbox-memory "3000" master

docker-machine create --driver virtualbox --virtualbox-memory "3000" worker1

docker-machine create --driver virtualbox --virtualbox-memory "3000" worker2

PS C:\Program Files\Docker Toolbox> docker-machine ls

NAME ACTIVE DRIVER STATE URL SWARM DOCKER ERRORS

master - virtualbox Stopped Unknown

worker1 - virtualbox Stopped Unknown

worker2 - virtualbox Stopped Unknown

PS C:\Program Files\Docker Toolbox> docker-machine start master

PS C:\Program Files\Docker Toolbox> docker-machine ip master

192.168.99.101

PS C:\Program Files\Docker Toolbox> docker-machine ssh master

Boot2Docker version 17.05.0-ce, build HEAD : 5ed2840 - Fri May 5 21:04:09 UTC 2017

Docker version 17.05.0-ce, build 89658be

docker@master:~$ exit

PS C:\Program Files\Docker Toolbox> docker-machine ssh master

docker@master:~$ docker swarm init --advertise-addr 192.168.99.101

The swarm join command below is generated by the swarm init command above (it includes the token).

To run it on both workers, I have to SSH to each one, run the command, then exit.

docker-machine ssh worker1

docker swarm join --token SWMTKN-1-41e2y83z8t462u4039lk0ugfwnuot5vqzbiv82ug6eadtbnbhl-2lfjygo3ic0nrnhjijffxhy47 192.168.99.101:2377

exit

docker-machine ssh worker2

docker swarm join --token SWMTKN-1-41e2y83z8t462u4039lk0ugfwnuot5vqzbiv82ug6eadtbnbhl-2lfjygo3ic0nrnhjijffxhy47 192.168.99.101:2377

exit

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.
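Logging in to every worker by hand gets tedious as the cluster grows. As a rough sketch, the same join can be looped over the workers by passing the command straight to docker-machine ssh. The helper name `join_workers` and the `<worker-token>` placeholder are assumptions for illustration; the script only prints the commands unless DOCKER_MACHINE=docker-machine is set.

```shell
#!/bin/sh
# Dry run by default: commands are printed, not executed.
# Set DOCKER_MACHINE=docker-machine in the environment to run them for real.
DOCKER_MACHINE="${DOCKER_MACHINE:-echo docker-machine}"

join_workers() {
  manager_addr="$1"   # manager address and port, e.g. 192.168.99.101:2377
  token="$2"          # worker token printed by "docker swarm init"
  shift 2
  # Remaining arguments are the docker-machine names of the workers.
  for worker in "$@"; do
    $DOCKER_MACHINE ssh "$worker" docker swarm join --token "$token" "$manager_addr"
  done
}

# Replace <worker-token> with the token from your own swarm.
join_workers 192.168.99.101:2377 "<worker-token>" worker1 worker2
```

If the token has scrolled away, running docker swarm join-token worker on the manager prints the join command again.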

Step 5: Display all cluster nodes configured

PS C:\Program Files\Docker Toolbox> docker-machine ssh master

docker@master:~$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS

63izegp4x3to7cgoao1j9uqqg * master Ready Active Leader

svamypaqkur7ai3je5ob2z78t worker2 Ready Active

whopzq6ywt2eakrend8ji3hb8 worker1 Ready Active

Step 6: Create a network to make our services visible to each other

docker@master:~$ docker network create -d overlay my_network

I've pushed both images to my Docker Hub account, and now I will pull and run them on the cluster.

docker tag bipin:eureka bipingupta007/eureka

docker push bipingupta007/eureka

docker tag bipin:config bipingupta007/config

docker push bipingupta007/config

docker@master:~$ docker service create -p 8761:8761 --name eureka --network my_network bipingupta007/eureka

docker@master:~$ docker service create -p 8888:8888 --name config --network my_network bipingupta007/config

- Here I've deployed each service with 1 replica. However, I could have used the --replicas parameter to set the number of replicas of the service.

- You can also see that I've exposed the ports and specified the network, to make sure the services can reach each other using the service name.

docker@master:~$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

5t42nzl8bvo0 eureka replicated 2/2 bipingupta007/eureka:latest *:8761->8761/tcp

uffvcped9b5o config replicated 1/1 bipingupta007/config:latest *:8888->8888/tcp

Step 9: Display tasks of a service

docker@master:~$ docker service ps eureka

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

cvppczf7wgfw eureka.1 bipingupta007/eureka:latest worker1 Running Running 2 minutes ago

As I've mentioned before, I could have set the number of replicas of a service at creation time. Since I've already started it, let's scale one of them to run 5 replicas.

docker@master:~$ docker service ps eureka

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

cvppczf7wgfw eureka.1 bipingupta007/eureka:latest worker1 Running Running 8 minutes ago

86vuhj694evg eureka.2 bipingupta007/eureka:latest master Running Running about a minute ago

a6oi3gfq9pcv eureka.3 bipingupta007/eureka:latest worker2 Running Running about a minute ago

Of course you can either scale up or down.
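The scale command itself does not appear in the transcript above. Assuming the service is still named eureka, it would take roughly this form; `scale_service` is a hypothetical wrapper, and the script only prints the commands unless DOCKER=docker is set.

```shell
#!/bin/sh
# Dry run by default: commands are printed, not executed.
# Set DOCKER=docker in the environment to run them on a swarm manager.
DOCKER="${DOCKER:-echo docker}"

scale_service() {
  # $1: service name, $2: desired number of replicas
  $DOCKER service scale "$1=$2"
}

scale_service eureka 5   # scale up
scale_service eureka 1   # scale back down
```

After scaling, docker service ps eureka should list one task per replica.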

Step 11: Remove the service and verify that the service was removed.

docker@master:~$ docker service rm eureka

docker@master:~$ docker service ls

docker@master:~$ docker ps

To reach a running service, you can use the IP of any machine in the cluster, not necessarily the manager's.

http://192.168.99.101:8761/

Sunday, May 21, 2017

Continuous Delivery with Docker on Mesos and Marathon

Full functional development and continuous integration environments

- Here is the full docker-compose.yml file that includes all parts of the system.

- In addition to Jenkins and Docker registry we have Mesos master, single Mesos slave, Mesosphere Marathon and Zookeeper for internal Mesos communication.

===============================================

version: '3'
services:
  zookeeper:
    image: garland/zookeeper
    hostname: "zookeeper"
    container_name: zookeeper
    ports:
      - "2181:2181"
      - "2888:2888"
      - "3888:3888"

  mesos-master:
    image: garland/mesosphere-docker-mesos-master
    hostname: "mesos-master"
    container_name: mesos-master
    environment:
      - MESOS_QUORUM=1
      - MESOS_ZK=zk://192.168.99.100:2181/mesos
      - MESOS_REGISTRY=in_memory
      - MESOS_LOG_DIR=C:/var/log/mesos
      - MESOS_WORK_DIR=C:/var/lib/mesos
    links:
      - zookeeper:zk
    ports:
      - "5050:5050"

  mesos-slave:
    image: garland/mesosphere-docker-mesos-master:latest
    hostname: "mesos-slave-1"
    container_name: mesos-slave-1
    environment:
      - MESOS_HOSTNAME=192.168.99.100
      - MESOS_MASTER=zk://192.168.99.100:2181/mesos
      - MESOS_LOG_DIR=C:/var/log/mesos
      - MESOS_LOGGING_LEVEL=INFO
    entrypoint: mesos-slave
    links:
      - zookeeper:zk
      - mesos-master:master
    ports:
      - "5151:5151"

  marathon:
    image: garland/mesosphere-docker-marathon
    hostname: "marathon"
    container_name: marathon
    environment:
      - MARATHON_MASTER=zk://192.168.99.100:2181/mesos
      - MARATHON_ZK=zk://192.168.99.100:2181/marathon
    command: --master zk://zk:2181/mesos --zk zk://zk:2181/marathon
    links:
      - zookeeper:zk
      - mesos-master:master
    ports:
      - "8080:8080"

###Environment

===============================================

It is important to note that the Jenkins container now includes a link to Marathon. This is required to be able to post the requests from the Jenkins container to the Marathon container.

Now we can restart the system and see it all up and running:

docker-compose up

A quick introduction to Apache Mesos

Apache Mesos is a centralised fault-tolerant cluster manager. It’s designed for distributed computing environments to provide resource isolation and management across a cluster of slave nodes.

In some ways, Mesos provides the opposite to virtualization:

- Virtualization splits a single physical resource into multiple virtual resources

- Mesos joins multiple physical resources into a single virtual resource

A Mesos cluster is made up of four major components:

- ZooKeeper

- Mesos masters

- Mesos slaves

- Frameworks

ZooKeeper

Apache ZooKeeper is a centralized configuration manager, used by distributed applications such as Mesos to coordinate activity across a cluster. Mesos uses ZooKeeper to elect a leading master and to let slaves join the cluster.
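To give a feel for how a leading master can be elected, here is a toy in-memory model of the common ZooKeeper election recipe: every candidate registers under an increasing sequence number, and the lowest surviving number is the leader. The class and method names are invented for this sketch, and it does not talk to a real ZooKeeper or reproduce Mesos's own implementation.

```python
import itertools


class ElectionRegistry:
    """Toy stand-in for a ZooKeeper election path (no real ZooKeeper involved)."""

    def __init__(self):
        self._counter = itertools.count()
        self._candidates = {}  # sequence number -> master name

    def join(self, name):
        """Register a candidate and return its sequence number."""
        seq = next(self._counter)
        self._candidates[seq] = name
        return seq

    def leave(self, seq):
        """Remove a candidate, e.g. because its session was lost."""
        self._candidates.pop(seq, None)

    def leader(self):
        """The candidate with the lowest sequence number leads, if any remain."""
        if not self._candidates:
            return None
        return self._candidates[min(self._candidates)]
```

When the current leader leaves, the next-lowest candidate takes over, which is what lets a cluster with several masters survive the loss of one.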

Mesos masters

A Mesos master is a Mesos instance in control of the cluster. A cluster will typically have multiple Mesos masters to provide fault-tolerance, with one instance elected the leading master.

Mesos slaves

A Mesos slave is a Mesos instance which offers resources to the cluster.

Slaves are the ‘worker’ instances: the Mesos master allocates tasks to them.
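The relationship between masters and slaves can be pictured with a small model: slaves contribute CPU and memory to one pool, and the master places each task on a slave with enough spare capacity. This is a deliberately simplified first-fit sketch with made-up names. Real Mesos offers resources to frameworks, which decide what to launch, so treat it as an illustration only.

```python
from dataclasses import dataclass, field


@dataclass
class Slave:
    name: str
    cpus: float
    mem: int  # megabytes still available
    tasks: list = field(default_factory=list)


class Master:
    def __init__(self, slaves):
        self.slaves = list(slaves)

    def total(self):
        """Free resources across the whole cluster, seen as one pool."""
        return sum(s.cpus for s in self.slaves), sum(s.mem for s in self.slaves)

    def launch(self, task, cpus, mem):
        """Place the task on the first slave that can fit it (first-fit)."""
        for slave in self.slaves:
            if slave.cpus >= cpus and slave.mem >= mem:
                slave.cpus -= cpus
                slave.mem -= mem
                slave.tasks.append(task)
                return slave.name
        return None  # no single slave has enough free capacity
```

Note that the pool is only virtual: a task still has to fit on one slave, so a request larger than any single machine is rejected even if the cluster total would cover it.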

Frameworks:

- On its own, Mesos only provides the basic “kernel” layer of your cluster. It lets other applications request resources in the cluster to perform tasks, but does nothing itself.

- Frameworks bridge the gap between the Mesos layer and your applications. They are higher level abstractions which simplify the process of launching tasks on the cluster.

Chronos

Chronos is a cron-like fault-tolerant scheduler for a Mesos cluster. You can use it to schedule jobs, receive failure and completion notifications, and trigger other dependent jobs.

Marathon

Marathon is the equivalent of the Linux upstart or init daemons, designed for long-running applications. You can use it to start, stop and scale applications across the cluster.
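Scaling is a good example of what Marathon adds. A minimal sketch, assuming Marathon's REST API is reachable and an app already exists: changing the instance count is a PUT to /v2/apps/<app id> with the new number. The URL and the app id hello below are placeholders chosen for illustration.

```python
import json
import urllib.request


def build_scale_request(marathon_url, app_id, instances):
    """Build (but do not send) a PUT that sets an app's instance count."""
    if instances < 0:
        raise ValueError("instances must be zero or more")
    url = marathon_url.rstrip("/") + "/v2/apps/" + app_id.strip("/")
    return urllib.request.Request(
        url=url,
        data=json.dumps({"instances": instances}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


# Hypothetical usage against a running cluster:
#   req = build_scale_request("http://192.168.99.100:8080", "/hello", 3)
#   urllib.request.urlopen(req, timeout=10)
```

Setting instances to 0 is one way to stop an app's tasks while keeping its definition in Marathon.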

Others:

There are a few other frameworks:

The quick start guide

Using Vagrant

Vagrant and the vagrant-mesos Vagrant file can help you quickly build:

- a standalone Mesos instance

- a multi-machine Mesos cluster of ZooKeepers, masters and slaves

Unfortunately, the network configuration is a bit difficult to work with - it uses a private network between the VMs, and SSH tunnelling to provide access to the cluster.

Using Mesosphere and Amazon Web Services

Mesosphere provide Elastic Mesosphere, which can quickly launch a Mesos cluster using Amazon EC2.

A third option is the set of Vagrant files listed below, which build individual components of a Mesos cluster. It’s a work in progress, but it can already build a working Mesos cluster without the networking issues. It uses bridged networking, with dynamically assigned IPs, so all instances can be accessed directly through your local network.

You’ll need the following GitHub repositories:

ian-kent/vagrant-zookeeper

ian-kent/vagrant-mesos-master

ian-kent/vagrant-mesos-slave

At the moment, a cluster is limited to one ZooKeeper, but can support multiple Mesos masters and slaves.

Each of the instances is also built with Serf to provide decentralized service discovery. You can run serf members on any instance to list all the others.

To help test deployments, there’s also a MongoDB build with Serf installed:

ian-kent/vagrant-mongodb

Like the ZooKeeper instances, the MongoDB instance joins the same Serf cluster but isn’t part of the Mesos cluster.

Once your cluster is running

You’ll need to install a framework.

Mesosphere lets you choose to install Marathon on Amazon EC2, so that could be a good place to start.

With Marathon or Aurora, you can even run other frameworks in the Mesos cluster for scalability and fault-tolerance.

Monday, December 28, 2015
