What is Flume?

Apache Flume is a tool, service, and data ingestion mechanism for collecting, aggregating, and transporting large amounts of streaming data, such as log files and events, from various sources to a centralized data store.

Advantages of Flume

Here are the advantages of using Flume −

- Using Apache Flume, we can store data in any of the centralized stores (such as HBase or HDFS).

- When the rate of incoming data exceeds the rate at which data can be written to the destination, Flume acts as a mediator between data producers and the centralized stores and provides a steady flow of data between them.

- Flume provides the feature of contextual routing.

- Transactions in Flume are channel-based: two transactions (one for the sender and one for the receiver) are maintained for each message, which guarantees reliable message delivery.

- Flume is reliable, fault tolerant, scalable, manageable, and customizable.

Apache Flume - Architecture

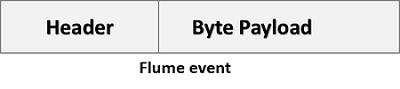

An event is the basic unit of data transported inside Flume. It contains a byte-array payload that is to be transported from the source to the destination, accompanied by optional headers.

A typical Flume event therefore has two parts: a set of optional headers and the byte-array payload.

Flume Agent

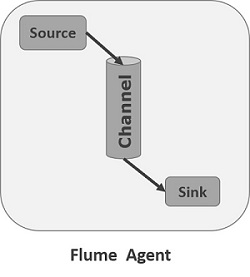

An agent is an independent daemon process (JVM) in Flume. It receives data (events) from clients or other agents and forwards it to its next destination (a sink or another agent). A Flume deployment may have more than one agent. The diagram above represents a Flume agent.

A Flume agent contains three main components: source, channel, and sink.

Source

A source is the component of an agent which receives data from the data generators and transfers it to one or more channels in the form of Flume events.

Example − Avro source, Thrift source, Twitter 1% source, etc.

Channel

A channel is a transient store which receives the events from the source and buffers them till they are consumed by sinks. It acts as a bridge between the sources and the sinks.

Example − JDBC channel, File channel, Memory channel, etc.
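As a rough illustration of how the channel type affects buffering, the fragment below sketches a file channel, which persists buffered events to disk instead of holding them in memory. The agent name `a1` and channel name `c1` follow the naming used in this document's example configuration; the directory paths are placeholders, not recommended locations.

```properties
# Sketch only: agent "a1" and channel "c1" are illustrative names.
a1.channels = c1

# A file channel stores buffered events on local disk.
a1.channels.c1.type = file

# Placeholder directories (assumptions); point these at real local paths.
a1.channels.c1.checkpointDir = /var/flume/checkpoint
a1.channels.c1.dataDirs = /var/flume/data
```

A memory channel is faster but loses buffered events if the agent process stops, so the choice is a trade-off between throughput and durability.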

Sink

A sink consumes data (events) from a channel and delivers it to the destination, which is typically a centralized store such as HBase or HDFS.

Example − HDFS sink
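A minimal sketch of an HDFS sink definition might look like the following. The agent, sink, and channel names (`a1`, `k1`, `c1`) are taken from this document's example; the NameNode host, port, and target directory are assumptions and need to match your cluster.

```properties
# Sketch only: sink "k1" on agent "a1", draining channel "c1".
a1.sinks = k1
a1.sinks.k1.type = hdfs
a1.sinks.k1.channel = c1

# Placeholder HDFS location (assumption); replace with your NameNode and path.
a1.sinks.k1.hdfs.path = hdfs://namenode:8020/flume/events

# Write events as a plain data stream instead of the default SequenceFile.
a1.sinks.k1.hdfs.fileType = DataStream
```

Note that a sink reads from exactly one channel, which is why the property is the singular `channel`, whereas a source uses the plural `channels`.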

Note: A Flume agent can have multiple sources, sinks, and channels.

Additional Components of Flume Agent

A few more components play a vital role in transferring events from the data generator to the centralized stores.

Interceptors

Interceptors are used to alter or inspect Flume events as they are transferred between the source and the channel.
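To make this concrete, the following sketch attaches two of Flume's built-in interceptors to a source. The names `a1`, `r1`, `i1`, and `i2` are illustrative, and the header key `hostname` is an arbitrary choice for this example.

```properties
# Sketch only: interceptors run in the order listed, before events reach the channel.
a1.sources.r1.interceptors = i1 i2

# i1 adds a "timestamp" header holding the time the event was processed.
a1.sources.r1.interceptors.i1.type = timestamp

# i2 adds the agent's host to the event headers under the key "hostname".
a1.sources.r1.interceptors.i2.type = host
a1.sources.r1.interceptors.i2.hostHeader = hostname
```

Headers added this way can later be used for routing decisions or by sinks that build paths from header values.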

Channel Selectors

These determine which channel an event is written to when a source is connected to multiple channels. There are two types of channel selectors −

Default channel selectors − These are also known as replicating channel selectors; they replicate every event to all of the configured channels.

Multiplexing channel selectors − These decide which channel an event is sent to based on a value in the header of that event.
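A multiplexing selector could be configured roughly as follows. The header name `region` and its values `us` and `eu` are hypothetical, chosen only for illustration; the agent, source, and channel names are likewise assumptions.

```properties
# Sketch only: source "r1" writes to three channels.
a1.sources = r1
a1.channels = c1 c2 c3
a1.sources.r1.channels = c1 c2 c3

# Route each event by the value of its "region" header (hypothetical header name).
a1.sources.r1.selector.type = multiplexing
a1.sources.r1.selector.header = region
a1.sources.r1.selector.mapping.us = c1
a1.sources.r1.selector.mapping.eu = c2

# Events with a missing or unmapped header value go to the default channel.
a1.sources.r1.selector.default = c3
```

If no selector is configured, the source uses the replicating selector and writes every event to all of its channels.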

Sink Processors

These are used to invoke a particular sink from a selected group of sinks. They can create failover paths for your sinks or load-balance events across multiple sinks from a channel.
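As a sketch of the failover case, the fragment below groups two sinks so that the second takes over if the first fails. The group name `g1`, the sink names, and the priority and penalty values are assumptions for illustration.

```properties
# Sketch only: group sinks k1 and k2 under one sink processor.
a1.sinkgroups = g1
a1.sinkgroups.g1.sinks = k1 k2

# Failover: the sink with the highest priority value is used first.
a1.sinkgroups.g1.processor.type = failover
a1.sinkgroups.g1.processor.priority.k1 = 10
a1.sinkgroups.g1.processor.priority.k2 = 5

# Upper limit, in milliseconds, on the backoff period for a failed sink.
a1.sinkgroups.g1.processor.maxpenalty = 10000
```

Setting `processor.type` to `load_balance` instead would spread events across the sinks in the group rather than preferring one of them.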

Multi-hop Flow

Within Flume, there can be multiple agents and before reaching the final destination, an event may travel through more than one agent. This is known as multi-hop flow.
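One common way to build a multi-hop flow is to pair an Avro sink on the first agent with an Avro source on the next. The sketch below assumes two agents named `a1` and `a2`, each normally defined in its own configuration file on its own host; the hostname and port are placeholders.

```properties
# Sketch only. First hop: agent a1 forwards events to the next agent over Avro.
a1.sinks.k1.type = avro
a1.sinks.k1.channel = c1
a1.sinks.k1.hostname = collector.example.com
a1.sinks.k1.port = 4545

# Second hop: agent a2 listens on the same port and receives those events.
a2.sources.r1.type = avro
a2.sources.r1.channels = c1
a2.sources.r1.bind = 0.0.0.0
a2.sources.r1.port = 4545
```

The sink's `hostname` and `port` must point at the address where the downstream source is bound.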

Fan-out Flow

The data flow from one source to multiple channels is known as fan-out flow. It is of two types −

- Replicating − The data is replicated to all the configured channels.

- Multiplexing − The data is sent to a selected channel, chosen according to the header of the event.
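A replicating fan-out can be sketched as one source feeding two channels, each drained by its own sink. All component names here are illustrative, and the source, channel, and sink types are omitted for brevity.

```properties
# Sketch only: one source, two channels, two sinks.
a1.sources = r1
a1.channels = c1 c2
a1.sinks = k1 k2

# The source writes every event to both channels.
a1.sources.r1.channels = c1 c2
a1.sources.r1.selector.type = replicating

# Each sink drains its own channel, so both destinations receive every event.
a1.sinks.k1.channel = c1
a1.sinks.k2.channel = c2
```

Because replicating is the default selector, the `selector.type` line is optional and is shown only to make the behaviour explicit.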

Fan-in Flow

The data flow in which data is transferred from many sources to one channel is known as fan-in flow.
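A fan-in flow within a single agent might be wired as in the sketch below, where two netcat sources feed the same channel. The second source `r2` and its port are assumptions added for illustration.

```properties
# Sketch only: two sources share one channel.
a1.sources = r1 r2
a1.channels = c1

a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
a1.sources.r1.port = 44444

# r2 is a hypothetical second source on a different port.
a1.sources.r2.type = netcat
a1.sources.r2.bind = localhost
a1.sources.r2.port = 44445

# Both sources write into channel c1.
a1.sources.r1.channels = c1
a1.sources.r2.channels = c1
```

Fan-in can also span machines, with several agents sending to one collecting agent, which combines this pattern with a multi-hop flow.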

Flume example using netcat (source) and logger (sink):

# START example.conf file : A single-node Flume configuration

# Name the components on this AGENT

a1.sources = r1

a1.channels = c1

a1.sinks = k1

# Configure the SOURCE

a1.sources.r1.type = netcat

a1.sources.r1.bind = localhost

a1.sources.r1.port = 44444

# Use a CHANNEL which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Configure the SINK

a1.sinks.k1.type = logger

# Bind the SOURCE and SINK to the CHANNEL

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

# END example.conf file

########## RUNNING FLUME AGENT ##########

# flume-ng agent --conf conf --conf-file example.conf --name a1

######## RUNNING DATA GENERATOR #########

# $ telnet localhost 44444

# Hello World!