- An agent that normally runs on each server you want to harvest logs from.

- Its job is to read the logs (e.g. from the filesystem), normalise them (e.g. into a common timestamp format), optionally extract structured data from them (e.g. session IDs, resource paths) and finally push them into Elasticsearch.

- Elasticsearch is a search engine focused on real-time search and analysis of the data it holds.

- It is document-oriented: you can store any data you want as JSON documents. This makes it powerful, simple and flexible.

- It is built on top of Apache Lucene and by default listens for HTTP requests on port 9200; each additional node started on the same machine takes the next free port (9201, 9202, ...).
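Because Elasticsearch speaks JSON over HTTP, storing a document is a single HTTP request. The minimal sketch below uses only the Python standard library to build such a request. It assumes an Elasticsearch 2.x-era node (the `/index/type/id` URL form, matching the plugin commands used in this post) and that extra nodes on one machine take consecutive ports from 9200; the index name `logs`, the type `apache` and the helper names are hypothetical.

```python
import json
import urllib.request


def node_url(node_index: int = 0, host: str = "localhost") -> str:
    """Base URL of the n-th local node, assuming ports are taken in order from 9200."""
    return f"http://{host}:{9200 + node_index}"


def build_index_request(doc: dict, index: str, doc_type: str, doc_id: str,
                        base_url: str = "http://localhost:9200") -> urllib.request.Request:
    """Build a PUT that stores `doc` as JSON under /index/type/id (ES 2.x-style URL)."""
    body = json.dumps(doc).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/{index}/{doc_type}/{doc_id}",
        data=body,
        method="PUT",
        headers={"Content-Type": "application/json"},
    )


def index_document(req: urllib.request.Request) -> dict:
    """Send the request and return Elasticsearch's JSON reply (needs a running node)."""
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Against a running node, `index_document(build_index_request({"level": "error"}, "logs", "apache", "1"))` would store the document; any JSON-serialisable dict can be used as the body.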

PLUGINS:

Note: Run the following commands from the Elasticsearch home directory to install web GUI plugins for ES. Site plugins like these are only supported up to Elasticsearch 2.x.

.\bin\plugin install mobz/elasticsearch-head

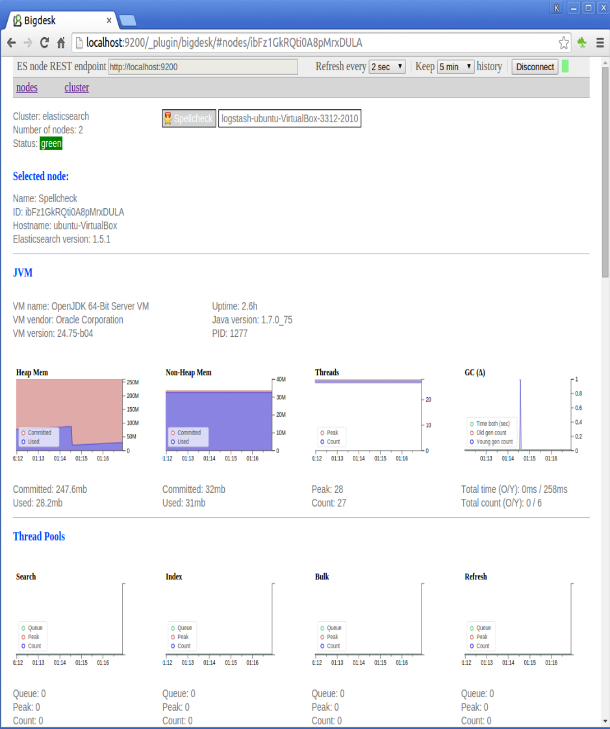

.\bin\plugin install lukas-vlcek/bigdesk

.\bin\plugin install royrusso/elasticsearch-HQ

.\bin\plugin install lmenezes/elasticsearch-kopf

Open http://localhost:9200/_plugin/head/ to see the Elasticsearch GUI.

Open http://localhost:9200/_plugin/bigdesk/ to monitor Elasticsearch health.
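The head and bigdesk plugins read this information from Elasticsearch's REST API. The same health summary is available directly from the `/_cluster/health` endpoint, whose `status` is `green`, `yellow` or `red`. The sketch below fetches and summarises it; the function names and the summary wording are hypothetical. On a single-node setup like this one, `yellow` is expected, because Elasticsearch will not place a replica shard on the same node as its primary.

```python
import json
import urllib.request

# What each cluster health status means.
STATUS_MEANING = {
    "green": "all primary and replica shards are allocated",
    "yellow": "all primary shards are allocated, but some replicas are not",
    "red": "at least one primary shard is not allocated",
}


def summarize_health(body: str) -> str:
    """Turn the JSON body of GET /_cluster/health into a one-line summary."""
    health = json.loads(body)
    status = health["status"]
    meaning = STATUS_MEANING.get(status, "unknown status")
    return f"{health['cluster_name']}: {status} ({meaning}), {health['number_of_nodes']} node(s)"


def fetch_health(base_url: str = "http://localhost:9200") -> str:
    """Query a running node and summarise its cluster health."""
    with urllib.request.urlopen(f"{base_url}/_cluster/health") as resp:
        return summarize_health(resp.read().decode("utf-8"))
```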

KIBANA:

- A browser-based interface served up from a web server.

- Its job is to let you build tabular and graphical visualizations of the log data based on Elasticsearch queries. Typically these are simple text queries, time ranges or far more complex aggregations.

- Starting Kibana launches its web server, and the GUI is then available at http://localhost:5601/.
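Kibana builds its visualizations by sending search requests to Elasticsearch. As a rough illustration, not the exact request Kibana sends, the sketch below builds a search body combining the three ingredients mentioned above: a simple text query, a time range and an aggregation. It assumes the `@timestamp` field that Logstash adds and the ES 2.x `date_histogram` syntax with `interval` (newer versions use `calendar_interval` or `fixed_interval`); `build_search` and its parameters are hypothetical.

```python
def build_search(text: str, start: str, end: str, interval: str = "1h") -> dict:
    """Search body: full-text query + time-range filter + events-per-interval histogram."""
    return {
        "size": 0,  # only the aggregation is wanted, not the matching documents
        "query": {
            "bool": {
                "must": [{"query_string": {"query": text}}],
                "filter": [{"range": {"@timestamp": {"gte": start, "lte": end}}}],
            }
        },
        "aggs": {
            "events_over_time": {
                "date_histogram": {"field": "@timestamp", "interval": interval}
            }
        },
    }
```

Serialised to JSON, such a body would be POSTed to `http://localhost:9200/logstash-*/_search`, since Logstash writes to daily `logstash-YYYY.MM.dd` indices by default.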

SHIPPERS:

Filebeat ships log files to Logstash (or directly to Elasticsearch).

Packetbeat analyzes your network data.

Topbeat collects infrastructure information such as CPU and memory usage.

Winlogbeat ships Windows event logs.
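Filebeat's core job is to follow log files and remember how far it has read (its registry), so that only new lines are shipped and nothing is resent after a restart. The sketch below illustrates that idea in Python under simplifying assumptions (one file, newline-terminated UTF-8 lines, no rotation handling); it is not Filebeat's implementation, and `read_new_lines` is a hypothetical name.

```python
import os


def read_new_lines(path: str, offset: int) -> tuple[list[str], int]:
    """Return complete lines appended after byte `offset`, plus the offset to resume from."""
    if os.path.getsize(path) < offset:
        offset = 0  # file was truncated: start again from the beginning
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    # Ship only complete lines; a trailing partial line is picked up on the next call.
    end = data.rfind(b"\n") + 1
    lines = data[:end].decode("utf-8").splitlines()
    return lines, offset + end
```

A shipper would call this in a loop, forwarding `lines` and persisting the returned offset between runs.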

SERVICE MANAGER:

NSSM (the Non-Sucking Service Manager) can run Elasticsearch, Logstash and Kibana as Windows services: https://nssm.cc/release/nssm-2.24.zip

LOGSTASH CONF FILE (logstash.conf):

input {
  file {
    type => "apache-access"
    path => "D:/access.log"
    # Read files Logstash has not seen before from the start, not just new lines
    start_position => "beginning"
  }
  file {
    type => "apache-error"
    path => "D:/error.log"
    start_position => "beginning"
  }
}

output {
  # Emit events to stdout for easy debugging of what is going through
  # Logstash.
  stdout { }

  # Send events to the local Elasticsearch node started below.
  elasticsearch {
    hosts => ["localhost:9200"]
  }
}
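With no filter section, this config stores each log line unparsed: the file input turns every line into an event whose `message` field holds the raw text, adds the configured `type` and the file `path`, and Logstash stamps it with `@timestamp` (the time it was read, not the time inside the line), `@version` and the `host` it ran on. The Python sketch below approximates that event shape to show what reaches Elasticsearch; the field list reflects Logstash 2.x-era defaults as an assumption, and `make_event` / `events_from_file` are hypothetical helpers.

```python
import socket
from datetime import datetime, timezone


def make_event(line: str, path: str, event_type: str) -> dict:
    """Approximate the event a Logstash file input creates for one unparsed log line."""
    return {
        "message": line.rstrip("\r\n"),  # the raw line, not yet split into fields
        "@version": "1",
        # ingestion time in UTC, not the time written inside the log line
        "@timestamp": datetime.now(timezone.utc)
        .isoformat(timespec="milliseconds")
        .replace("+00:00", "Z"),
        "path": path,
        "host": socket.gethostname(),
        "type": event_type,  # from `type => ...` in the matching file block
    }


def events_from_file(path: str, event_type: str):
    """Yield one event per non-empty line of `path`."""
    with open(path, encoding="utf-8") as f:
        for line in f:
            if line.strip():
                yield make_event(line, path, event_type)
```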

SAMPLE ERROR LOG FILE (D:\error.log):

[Fri Dec 16 01:46:23 2005] [error] [client 1.2.3.4] Directory index forbidden by rule: /home/test/
[Fri Dec 16 01:54:34 2005] [error] [client 1.2.3.4] Directory index forbidden by rule: /apache/web-data/test2
[Fri Dec 16 02:25:55 2005] [error] [client 1.2.3.4] Client sent malformed Host header
[Mon Dec 19 23:02:01 2005] [error] [client 1.2.3.4] user test: authentication failure for "/~dcid/test1": Password Mismatch
[Sat Aug 12 04:05:51 2006] [notice] Apache/1.3.11 (Unix) mod_perl/1.21 configured -- resuming normal operations
[Thu Jun 22 14:20:55 2006] [notice] Digest: generating secret for digest authentication ...
[Thu Jun 22 14:20:55 2006] [notice] Digest: done
[Thu Jun 22 14:20:55 2006] [notice] Apache/2.0.46 (Red Hat) DAV/2 configured -- resuming normal operations
[Sat Aug 12 04:05:49 2006] [notice] SIGHUP received. Attempting to restart
[Sat Aug 12 04:05:51 2006] [notice] suEXEC mechanism enabled (wrapper: /usr/local/apache/sbin/suexec)
[Sat Jun 24 09:06:22 2006] [warn] pid file /opt/CA/BrightStorARCserve/httpd/logs/httpd.pid overwritten -- Unclean shutdown of previous Apache run?
[Sat Jun 24 09:06:23 2006] [notice] Apache/2.0.46 (Red Hat) DAV/2 configured -- resuming normal operations
[Sat Jun 24 09:06:22 2006] [notice] Digest: generating secret for digest authentication ...
[Sat Jun 24 09:06:22 2006] [notice] Digest: done
[Thu Jun 22 11:35:48 2006] [notice] caught SIGTERM, shutting down
[Tue Mar 08 10:34:21 2005] [error] (11)Resource temporarily unavailable: fork: Unable to fork new process
[Tue Mar 08 10:34:31 2005] [error] (11)Resource temporarily unavailable: fork: Unable to fork new process
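Because the config has no filter section, Logstash indexes these lines as plain `message` text. Extracting fields, as a `grok` plus `date` filter would, means splitting out the timestamp, severity, optional client address and message, and normalising the timestamp. The sketch below does this with a hand-written Python regular expression, not Logstash's grok patterns. It assumes the Apache 2.0-style layout shown above, where the `[client ...]` part is optional, and leaves the normalised time without a timezone because these lines carry none; `parse_error_line` is a hypothetical name.

```python
import re
from datetime import datetime
from typing import Optional

# [Fri Dec 16 01:46:23 2005] [error] [client 1.2.3.4] message...
ERROR_LINE = re.compile(
    r"^\[(?P<time>[^\]]+)\] \[(?P<level>\w+)\]"
    r"(?: \[client (?P<client>[^\]]+)\])?"
    r" (?P<message>.*)$"
)


def parse_error_line(line: str) -> Optional[dict]:
    """Split an Apache error-log line into fields; return None if it does not match."""
    m = ERROR_LINE.match(line.strip())
    if m is None:
        return None
    fields = m.groupdict()
    # Normalise "Fri Dec 16 01:46:23 2005" to ISO 8601 (no timezone in the source line).
    fields["time"] = datetime.strptime(fields["time"], "%a %b %d %H:%M:%S %Y").isoformat()
    return fields
```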

SAMPLE ACCESS LOG FILE (D:\access.log):

192.168.2.20 - - [28/Jul/2006:10:27:10 -0300] "GET /cgi-bin/try/ HTTP/1.0" 200 3395
127.0.0.1 - - [28/Jul/2006:10:22:04 -0300] "GET / HTTP/1.0" 200 2216
127.0.0.1 - - [28/Jul/2006:10:27:32 -0300] "GET /hidden/ HTTP/1.0" 404 7218
x.x.x.90 - - [13/Sep/2006:07:01:53 -0700] "PROPFIND /svn/[xxxx]/Extranet/branches/SOW-101 HTTP/1.1" 401 587
x.x.x.90 - - [13/Sep/2006:07:01:51 -0700] "PROPFIND /svn/[xxxx]/[xxxx]/trunk HTTP/1.1" 401 587
x.x.x.90 - - [13/Sep/2006:07:00:53 -0700] "PROPFIND /svn/[xxxx]/[xxxx]/2.5 HTTP/1.1" 401 587
x.x.x.90 - - [13/Sep/2006:07:00:53 -0700] "PROPFIND /svn/[xxxx]/Extranet/branches/SOW-101 HTTP/1.1" 401 587
x.x.x.90 - - [13/Sep/2006:07:00:21 -0700] "PROPFIND /svn/[xxxx]/[xxxx]/trunk HTTP/1.1" 401 587
x.x.x.90 - - [13/Sep/2006:06:59:53 -0700] "PROPFIND /svn/[xxxx]/[xxxx]/2.5 HTTP/1.1" 401 587
x.x.x.90 - - [13/Sep/2006:06:59:50 -0700] "PROPFIND /svn/[xxxx]/[xxxx]/trunk HTTP/1.1" 401 587
x.x.x.90 - - [13/Sep/2006:06:58:52 -0700] "PROPFIND /svn/[xxxx]/[xxxx]/trunk HTTP/1.1" 401 587
x.x.x.90 - - [13/Sep/2006:06:58:52 -0700] "PROPFIND /svn/[xxxx]/Extranet/branches/SOW-101 HTTP/1.1" 401 587
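These access-log lines follow Apache's Common Log Format: client address, identity, user, a bracketed time with a UTC offset, the quoted request line, the status code and the response size. The sketch below parses that layout with a hand-written Python regular expression (standing in for Logstash's `COMMONAPACHELOG` grok pattern, not copied from it) and normalises the time to UTC, the kind of common timestamp format Logstash is meant to produce. `parse_access_line` is a hypothetical name, and mapping a `-` size to 0 is an arbitrary choice.

```python
import re
from datetime import datetime, timezone
from typing import Optional

# 127.0.0.1 - - [28/Jul/2006:10:22:04 -0300] "GET / HTTP/1.0" 200 2216
ACCESS_LINE = re.compile(
    r'^(?P<client>\S+) (?P<ident>\S+) (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<bytes>\d+|-)$'
)


def parse_access_line(line: str) -> Optional[dict]:
    """Split a Common Log Format line into typed fields; return None if it does not match."""
    m = ACCESS_LINE.match(line.strip())
    if m is None:
        return None
    f = m.groupdict()
    # "28/Jul/2006:10:22:04 -0300" carries its own offset, so it can be converted to UTC.
    local = datetime.strptime(f["time"], "%d/%b/%Y:%H:%M:%S %z")
    f["time"] = local.astimezone(timezone.utc).isoformat()
    f["status"] = int(f["status"])
    f["bytes"] = 0 if f["bytes"] == "-" else int(f["bytes"])  # "-" means no body was sent
    return f
```

Using this parsed time as the event's `@timestamp`, rather than the ingestion time, is what lets Kibana's time filter line up with when the requests actually happened.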

START ELASTICSEARCH:

.\bin\elasticsearch.bat

Check Elasticsearch: http://localhost:9200/ should return a short JSON status document, and the head GUI is at http://localhost:9200/_plugin/head/.
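Elasticsearch takes a few seconds to start, and Logstash's elasticsearch output needs it to be reachable. A small polling helper can wait for `http://localhost:9200/` to answer before the Logstash agent is started. This is a hypothetical convenience function, not part of the ELK distribution; it only checks for an HTTP 200 reply, and the timeout and interval defaults are arbitrary.

```python
import time
import urllib.request


def wait_for_http(url: str, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Poll `url` until it answers with HTTP 200 or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            # URLError, refused connections and socket timeouts are all OSError subclasses
            pass
        time.sleep(interval)
    return False
```

The same helper works for Kibana by pointing it at `http://localhost:5601`.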

START LOGSTASH AGENT:

.\bin\logstash agent -f logstash.conf

START KIBANA:

.\bin\kibana.bat

Check Kibana: open http://localhost:5601/.

NOTE: Save the sample access and error logs above as D:\access.log and D:\error.log, the paths used in logstash.conf.